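A recurrent neural network (RNN) is a type of artificial neural network in which connections between nodes form a directed graph along a sequence. This lets the network exhibit temporal dynamic behavior: its internal state carries information from earlier steps of the input forward to later ones. RNNs can be used to model time series or sequence data, such as text, and have been successful in a variety of tasks, such as language modeling, machine translation, and image captioning. A recursive neural network, the main subject of this article, is a related but distinct architecture that applies the same weights over a tree-like structure rather than a linear sequence.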

A Recursive Neural Network is essentially a deep neural network designed to work on structured inputs. You can expect to get structured predictions by applying the same set of weights recursively to those inputs. Networks like these are nonlinear by nature, and their adaptive structure lets them learn deep, structured representations. More broadly, deep learning is one of several key technologies behind voice control in mobile devices and in voice-enabled speakers such as Alexa, and it has contributed to the development of driverless cars. Aside from image classification, it has also demonstrated its ability to recognize speech with high accuracy.

Both recursive and recurrent neural networks are commonly abbreviated as RNN, so it is important to note which one is meant. A recursive neural network processes structured inputs, using a tree-like hierarchy to do so, whereas a recurrent neural network processes its input as a linear sequence and has no notion of tree-structured input and output. Recurrent networks recognize patterns in data that contains past information. A common application of the recursive neural network is analyzing sentiment in sentences.

Anyone familiar with machine learning algorithms can appreciate how much potential this technology has. Recurrent Neural Networks (RNNs) are used in machine translation because each output can depend on the sequence of inputs that came before it. For example, an isolated fragment such as “rundog” has no meaning without the context that preceded it. Without a mechanism like an RNN for carrying context forward, a machine translator cannot make sound inferences about its input.

What Is A Recursive Neural Network Used For?

A Recursive Neural Network is often used to analyze sentiment in natural language sentences. Sentiment analysis, the process of determining the tone and sentiment of a specific sentence, is one of the most important tasks in Natural Language Processing (NLP).

The Power Of Recursive Neural Networks

Recursive neural networks are powerful in data representation and prediction because they can mirror the nested structure of natural language. Recursive data is information organized into structures that repeat within themselves, such as phrases that contain smaller phrases, and recursive networks are built to exploit that property. They are widely used to process tree structures and to learn from sequences.

What Is The Difference Between CNN And RNN?

The primary distinction between a CNN and an RNN is that an RNN is designed to process temporal information, which is information that comes in sequence, such as a sentence. Convolutional neural networks, on the other hand, are designed primarily for spatial data such as images and do not maintain a memory of earlier inputs in the same way.

The types of artificial neural networks used in machine learning vary depending on the task, and a network has a different structure depending on the specific use case. Convolutional neural networks are most closely associated with image tasks, while other kinds of neural networks are used for a wider variety of purposes. In this article, we will compare and contrast CNNs and RNNs. In a CNN, a filter convolves over the pixels of the image before passing the result on to the next layer. As the CNN trains, it continually adjusts its filters so that they detect features and patterns in a photograph, such as edges, curves, textures, and more.
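To make the idea of a filter sliding over pixels concrete, here is a minimal sketch in plain Python. The function name `convolve2d`, the tiny 4x4 image, and the hand-picked `vertical_edge` filter are all illustrative assumptions; a real CNN learns its filter values during training, and, like most deep learning libraries, this sketch does not flip the kernel (strictly speaking it computes a cross-correlation).

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            # Multiply the filter with the patch of pixels underneath it and sum.
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A 4x4 image: dark (0) on the left half, bright (1) on the right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked filter that responds to dark-to-bright vertical edges (assumption).
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The feature map is nonzero only in the middle column, where the filter straddles the boundary between the dark and bright halves of the image.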

Recurrent neural networks are designed to interpret temporal or sequential data. To influence the output, they take in the current input and reuse the activations of previous nodes, or in bidirectional variants later nodes, in the sequence. Consider an autocorrect system that takes the letters you typed as input and predicts whether the word is correct. If you meant to type “network” but typed “networc” by mistake, the system takes in the activations from the previous letters “networ” and the current letter “c”, and then outputs “k” as the correct last letter. RNNs could be used for spelling correction in this manner, but they would have to be carefully designed and trained.

CNNs are better suited than RNNs to detecting patterns in images. A CNN, for example, has a better ability to recognize edges, shapes, and textures. Both CNN and RNN models can be built with multiple layers, although the layers play different roles: CNN layers build up spatial features, while stacked RNN layers each process the sequence over time.

Cognitive Capabilities Of Neural Networks

CNNs and RNNs perform better than traditional methods of image recognition and encoding.

What Is An RNN And How Does It Work?

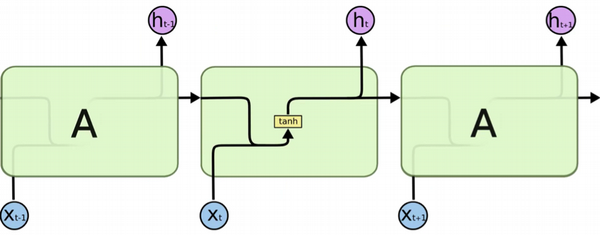

An RNN is a type of neural network where connections between nodes form a directed graph along a sequence. This allows it to exhibit temporal dynamic behavior. For example, its output can depend on both the inputs it receives and the states of the nodes.

We will learn more about recurrent neural networks (RNNs) in this section. Deep learning models pass information from one layer to the next, and during training the data flows through all of the neurons so that they can learn patterns. In many cases, a standard artificial neural network (ANN) is sufficient, and ANNs can outperform popular machine learning models such as logistic regression, random forest, and support vector machine on some tasks. However, when we must work with sequences of data, such as text or time series, a standard feed-forward ANN does not work well.

Recurrent Neural Networks (RNNs) are used to solve this problem. The primary purpose of an RNN is to process sequential data. The ability of RNNs to remember information about earlier inputs is what makes them unique, and it is critical for making more precise predictions. A basic recurrent neural network is made up of three layers: an input layer, a hidden layer, and an output layer. Feed-forward neural networks consider only the current input, whereas an RNN considers the current input together with what it has retained from previous inputs. What the two have in common is largely the training procedure.
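The way a hidden layer carries information from one input to the next can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the update rule `h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)`, the names `W_xh`, `W_hh`, and `b_h`, and the weight values are all assumptions chosen for the example, and the output layer is omitted.

```python
import math


def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One time step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h_new = []
    for i in range(len(h_prev)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new


def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run the same step (same weights) over every element of the sequence."""
    h = [0.0] * len(b_h)  # initial hidden state
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states


# Illustrative weights: 1 input feature, 2 hidden units (assumed values).
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]

# Both sequences end with the same input (0.0) but differ in the first step.
states_a = rnn_forward([[1.0], [0.0]], W_xh, W_hh, b_h)
states_b = rnn_forward([[0.0], [0.0]], W_xh, W_hh, b_h)
print(states_a[1])
print(states_b[1])
```

Although the second input is identical in both sequences, the final hidden states differ, because the hidden state still reflects what the network saw at the first step. A feed-forward network given only the second input could not tell the two cases apart.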

Each input is processed with the same weights as the one before it. RNNs are used in machine translation, speech recognition, and conversational AI. Training an RNN is very similar to training any other neural network: it uses the backpropagation algorithm, applied across time steps (backpropagation through time). RNNs have been used in a variety of voice assistants, including Google Assistant, Alexa from Amazon, Apple Siri, and smart home assistants from other manufacturers. RNNs are used to derive patterns from sequential data sets, which is beneficial for a variety of tasks. Machine translation, for example, allows us to automate a language translation task in a systematic manner.

Sentiment analysis is among the most common applications in natural language processing. RNNs face two main training challenges: vanishing gradients and exploding gradients. A gradient measures how much the output of a function changes when its inputs are slightly modified. The higher the gradient, the steeper the slope and the faster the model can learn. If the slope is zero, the model stops learning. A vanishing gradient occurs when the gradient values become too small as they are propagated back through many time steps. As a result, the model effectively stops learning from earlier inputs, or takes far longer to train.
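The shrinking-gradient effect described above can be observed numerically with a one-unit network. The sketch below assumes a scalar recurrence `h_t = tanh(w * h_{t-1} + x_t)` and multiplies the per-step derivatives together, as the chain rule does during backpropagation through time. The function name, the weight `w = 0.5`, and the constant inputs are illustrative assumptions.

```python
import math


def gradient_through_time(w, inputs, h0=0.0):
    """Return |d h_T / d h_0| for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: derivative of this step with respect to the previous state.
        grad *= w * (1.0 - h * h)
    return abs(grad)


short_seq = gradient_through_time(0.5, [0.1] * 5)
long_seq = gradient_through_time(0.5, [0.1] * 50)
print(short_seq, long_seq)
```

Because every factor here has magnitude at most 0.5, the product shrinks at least as fast as 0.5 raised to the sequence length, so the signal reaching the earliest time steps of the 50-step sequence is vanishingly small. With a recurrent weight large enough that the per-step factors exceed 1, the same product can instead grow without bound, which is the exploding gradient case.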

Long Short-Term Memory (LSTM) networks extend an RNN's memory by adding a dedicated memory cell to each unit. An LSTM has three gates: input, forget, and output. The input gate determines whether or not to let new information into the cell, the forget gate discards information that is no longer relevant, and the output gate controls how much of the cell's contents influence the output at the current step. LSTMs are one example of how RNNs continue to be refined over time.
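As a rough illustration of how the three gates interact, here is a scalar LSTM step in plain Python. It follows the commonly used LSTM formulation, which is an assumption about what the text intends; the function `lstm_step`, the parameter dictionary layout, and the extreme bias values used to force the gates open or shut are all hypothetical choices made for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One scalar LSTM step. `params` maps a gate name to (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much new information to let in
    g = math.tanh(pre("candidate"))   # the new information itself
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    c = f * c_prev + i * g            # updated cell state (long-term memory)
    h = o * math.tanh(c)              # updated hidden state (output)
    return h, c


# Forget gate forced open, input gate forced shut: the cell keeps its memory.
keep = {
    "forget": (0.0, 0.0, 20.0),
    "input": (0.0, 0.0, -20.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 0.0),
}
# Same settings, but with the forget gate forced shut: the memory is erased.
erase = dict(keep, forget=(0.0, 0.0, -20.0))

_, c_keep = lstm_step(0.5, 0.0, 1.0, keep)
_, c_erase = lstm_step(0.5, 0.0, 1.0, erase)
print(c_keep, c_erase)
```

With the forget gate open the cell state stays close to its previous value of 1.0, and with it shut the cell state drops to nearly zero. In a trained LSTM the gates take intermediate values that depend on the current input and previous hidden state.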

RNNs are used in a variety of ways in speech recognition. For example, a recurrent neural network can be used to produce a pronunciation sequence for a word: the network learns from a large amount of speech data and then generates pronunciations for new words. Another application of recurrent neural networks is text recognition. A network can be trained on a large amount of text data and then used to identify patterns in new text streams.

The Advantages Of RNNs

RNNs provide advantages over other algorithms in sequential data problems. RNNs’ ability to recall past inputs is one of their most significant advantages. Many machine learning problems, including speech recognition and text translation, involve sequential data.

RNNs can also learn from data that is not linearly structured. This means that RNNs can learn to generalize from data that is not linearly separable, which is essential for problems like text recognition and machine translation.

RNNs, in addition to being quick and efficient, are useful for large-scale data collection. RNNs have the ability to solve a wide range of problems and can be used for a variety of purposes.

What Is Meant By RNN?

An RNN is a type of artificial neural network where connections between nodes form a directed graph along a temporal sequence. This allows it to exhibit temporal dynamic behavior. Unlike traditional neural networks, which assume that all inputs are independent of each other, an RNN can maintain internal state by using its internal memory. This makes them well-suited for modeling time series or text data, where there is some type of dependence over the input sequence.

Recurrent Neural Networks (RNNs) are artificial neural networks that can process sequential data, recognize patterns, and predict the most likely outcome. An RNN’s internal memory, which retains information from earlier inputs, is responsible for its ability to recall context. RNNs have been used by popular products such as Google’s voice search and Apple’s Siri to process user input. An RNN can also be used to generate text: it learns the likelihood of a word or character appearing given the previous sequence of words or characters in the text. In speech applications, the primary goal of speech recognition is to convert voice data into text, whereas the primary goal of voice recognition is to identify the user’s voice. RNNs are also used for time series prediction, where a model trained on historical data forecasts future values. Investors, for example, can use such forecasts as one input when making investment decisions.

When RNNs are used in speech recognition, the audio is typically divided into phonetic segments. For each segment, the network can estimate the output probability of individual phonemes. This information is important because it enables the network to make more precise predictions about which words were spoken.

Sentiment analysis, by contrast, is the process of identifying the emotional content of a given sentence. This information can help determine what is meant by a sentence.

Recursive Neural Network Example

A recurrent neural network (RNN) is a type of artificial neural network where connections between nodes form a directed graph along a sequence. This allows it to exhibit temporal dynamic behavior. Unlike feedforward neural networks, RNNs can use their internal state (memory) to process sequences of inputs. This makes them applicable to tasks such as unsegmented, connected handwriting recognition or speech recognition.

Recursive Neural Network Tutorial

A recurrent neural network (RNN) is a type of artificial neural network where connections between units form a directed graph along a temporal sequence. This allows it to exhibit temporal dynamic behavior. Unlike a feedforward neural network, where connections between units do not form a cycle, an RNN can use its internal state (memory) to process sequences of inputs. This makes them applicable to tasks such as unsegmented, connected handwriting recognition, or speech recognition.

Recurrent neural networks (RNNs) are among the most popular machine learning algorithms. They were developed to address several issues with feed-forward neural networks: an RNN can handle sequential data, taking into account both the current input and previous inputs. Feed-forward neural networks are those that allow data to flow in only one direction, from the input nodes, through the hidden layers, to the output nodes. In a recurrent neural network, every time step of the hidden layer shares the same parameters, such as activation functions, weights, and biases. Recurrent Neural Networks are commonly classified into four types: one-to-one, one-to-many, many-to-one, and many-to-many.

A recurrent neural network can be used to model problems that are time-dependent or sequential in nature, such as stock market predictions, machine translation, and text generation. The vanishing gradient problem is one of the main difficulties with RNNs. Gradient problems typically show up as long training times, poor performance, and poor accuracy. Long Short-Term Memory networks (LSTMs) are a type of RNN designed to address this problem: they can learn long-term dependencies by remembering information over long periods of time. Like all RNNs, an LSTM has a chain-like structure, but its repeating module is built differently from that of a standard RNN. Instead of a single neural network layer, it contains four layers that interact with one another.

An LSTM works in steps. The input gate can be used to add new information to the cell state. The third step is to determine what will be output, that is, which parts of the cell state end up in the output. For example, if the preceding text describes someone’s bravery, a name such as “John” could be the best next output. TensorFlow is an open-source software library intended for machine learning and deep neural network research, and it can be used to practice deep learning techniques.

Recursive Neural Networks: A Class Of Deep Neural Networks

Recursive neural networks (RvNNs), which are a class of deep neural networks, are capable of learning from complex structured data such as trees and graphs. You can obtain a structured prediction using an RvNN by recursively applying the same set of weights to structured inputs. At each node, the output of the network becomes an input to the computation at the parent node. Recursive neural networks use hierarchical structures, combining child representations into parent representations, whereas recurrent neural networks use a linear progression through time, combining the previous hidden state and the current input into the current hidden state.
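The idea of combining child representations into parent representations with one shared set of weights can be sketched as a short recursive function. Everything specific here is an assumption made for illustration: the `tanh(W [left; right] + b)` composition rule, the nested-tuple tree encoding, and the toy two-dimensional word vectors and weights. A trained model would learn these values.

```python
import math


def compose(left, right, W, b):
    """Parent vector = tanh(W [left; right] + b), with the same W and b at every node."""
    children = left + right  # concatenate the two child vectors
    return [
        math.tanh(sum(w * c for w, c in zip(row, children)) + bias)
        for row, bias in zip(W, b)
    ]


def encode(tree, embeddings, W, b):
    """Encode a binary tree given as a word (leaf) or a (left, right) tuple."""
    if isinstance(tree, str):
        return embeddings[tree]
    left, right = tree
    return compose(
        encode(left, embeddings, W, b),
        encode(right, embeddings, W, b),
        W,
        b,
    )


# Toy 2-dimensional word vectors and shared weights (assumed values).
embeddings = {"not": [-1.0, 0.0], "very": [0.5, 0.5], "good": [1.0, 0.2]}
W = [
    [0.5, 0.0, 0.5, 0.0],
    [0.0, 0.5, 0.0, 0.5],
]
b = [0.0, 0.0]

right_branching = encode(("not", ("very", "good")), embeddings, W, b)
left_branching = encode((("not", "very"), "good"), embeddings, W, b)
print(right_branching)
print(left_branching)
```

The same three words produce different sentence vectors depending on how the tree groups them, which is the property that distinguishes a recursive network from one that reads the words strictly left to right.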

Recursive Neural Network Python

A recursive neural network is a type of neural network that is able to learn recursive patterns. It is a powerful tool for learning from data, and has been successfully used for tasks such as natural language processing and computer vision.

Deep neural networks have allowed researchers to make progress in natural language understanding. In many linguists’ opinion, language is best understood as a hierarchical tree of phrases, and recursive neural networks take that structure into account. However, these models are difficult to implement and inefficient to run. The PyTorch framework, which was still relatively new at the time of writing, makes it much easier to build and use these and other complex natural language processing models. One such model is SPINN, which encodes each sentence into a fixed-length vector representation so that relationships between sentences can be examined. PyTorch differs from traditional deep learning frameworks such as TensorFlow and Theano, both of which use static graphs to define computations.

With automatic differentiation, we can compute gradients without any additional effort. In many popular frameworks such as TensorFlow, Theano, and Keras, a computation graph is a static object that is built once and then run repeatedly. A graph like this works well for convolutional networks with a fixed structure. In more complex cases, you might want to build models whose structure depends on the input or on the output of subnetworks within the model. PyTorch is described as the first define-by-run deep learning framework that matches the capabilities and performance of static graph frameworks like TensorFlow. It can be used to build convolutional networks, recurrent networks, reinforcement learning models, and more. Because the graph is defined as the code runs, ordinary Python for loops can be used to construct a network, and we’ll take a closer look at the SPINN implementation in this code review.

In PyTorch, the forward pass of a model is defined by a user-implemented forward method, here SPINN.forward. This method is plain Python that implements a stack-manipulation algorithm. The TreeLSTM is the composition function that combines the representations of each pair of left and right sub-phrases into the representation of the parent phrase. The forward code of SPINN and its submodules produces an extremely complex computation graph. Even so, it can be backpropagated automatically each time, with very little overhead, by simply calling loss.backward() from any point in the graph. The version described here has no compilation step and is reported to take approximately 13 hours to train on the GPUs mentioned (a Tesla K40 and a Quadro GP100). PyTorch is also described as the first framework to include stochastic computation graphs for reinforcement learning.
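The stack-manipulation idea can be illustrated without any deep learning library. The sketch below is not the SPINN code: it assumes a simple shift-reduce scheme in which "shift" moves the next word onto a stack and "reduce" pops two items and pushes their combination. The function name, the transition labels, and the `bracket` stand-in (used here in place of a learned composition function such as the TreeLSTM) are all hypothetical.

```python
def shift_reduce_encode(tokens, transitions, compose):
    """Build one phrase representation using a stack and a sequence of transitions."""
    buffer = list(reversed(tokens))  # pop() then yields tokens in their original order
    stack = []
    for op in transitions:
        if op == "shift":
            stack.append(buffer.pop())
        elif op == "reduce":
            right = stack.pop()
            left = stack.pop()
            stack.append(compose(left, right))
        else:
            raise ValueError(f"unknown transition: {op}")
    if len(stack) != 1 or buffer:
        raise ValueError("transitions must consume all tokens and leave one item")
    return stack[0]


def bracket(left, right):
    """Stand-in composition that records the tree shape as a string."""
    return f"({left} {right})"


result = shift_reduce_encode(
    ["the", "cat", "sat"],
    ["shift", "shift", "reduce", "shift", "reduce"],
    bracket,
)
print(result)
```

Because the loop is ordinary Python, the sequence of operations can differ for every sentence. In a define-by-run framework, replacing `bracket` with a neural composition function would make each sentence build its own computation graph as the loop executes.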

Adding a reinforcement learning component is as simple as adding it to your existing model and altering the main SPINN for loop. PyTorch greatly lowers the barrier to entry in this field. The official tutorials include a 60-minute introduction and cover Deep Q Learning, a modern reinforcement learning model. Although PyTorch had only been available for a short time when this was written, three research papers that use it had already been published, and it was in use in academic and industry labs.

LSTM Networks: A Powerful Tool For Modeling Long-term Dependencies

RNNs have been around for a while, but the field is still developing. They are especially popular in speech recognition, language translation, and other tasks that require modeling sequences or time series. A Long Short-Term Memory (LSTM) network is a newer type of recurrent network that is especially useful for modeling long-term dependencies in data. LSTM networks were designed to mitigate the RNN’s vanishing gradient problem, which is what allows them to handle long-term dependencies. Gradient vanishing occurs when the training signal shrinks as it is propagated back through the network’s recurrent connections over many time steps. An LSTM addresses this by learning to discard useless information and retain what matters. LSTM networks are powerful tools that can be used for a wide range of purposes, and they are a good choice if you need a neural network that can track how data changes over time.

Recursive Network

In computer networking, a different field from the neural networks discussed in this article, a recursive network is described as a type of computer network in which each node can act as a server for other nodes in the network. This type of network is sometimes used to provide high-speed Internet access to users.

Recursive Neural Networks: A Powerful Tool For Natural Language Processing

The recursive neural network is a powerful tool for natural language processing. These models apply the same neural network at every node of a tree. When a sentence is segmented into phrases and processed using recursive neural tensor networks, you can determine which word groups are positive and which are negative.

Recursive Neural Network Vs Recurrent Neural Network

Recursive and recurrent neural networks are sometimes compared on accuracy. Some argue that recursive neural networks are more accurate because they can take into account the hierarchical relationships between words in a sentence. Others argue that recurrent neural networks are more accurate because they can take into account the sequential context of a sentence. In practice, which works better depends on the task and the data.

In this post, I will go over how Recurrent Neural Networks work. It is easy to understand why the Recurrent Neural Network is so named. RNNs are intended to make use of sequential information. If you want to predict the next word in a sentence, you need to know which words preceded it. Recurrent neural networks are called recurrent because they perform the same task for every element of a sequence, with the output depending on previous computations. In theory, an RNN can make use of information in arbitrarily long sequences, but in practice it is limited to looking back only a few steps. An RNN’s hidden state is its most important feature.