To understand why neural networks need weights, it helps to first understand what a neural network is. A neural network is a mathematical model loosely inspired by the way the brain works. It is made up of interconnected processing nodes, or neurons, each of which performs a simple mathematical operation. The output of each neuron is passed to the neurons in the next layer until the final output is produced.

The weights in a neural network determine the strength of the connections between neurons. They are typically assigned randomly when the network is first created and then adjusted as the network is trained. The purpose of training is to find a set of weights that produces the desired output for a given input.

Weights are needed because the output of each neuron is a function of the weights on its incoming connections. If the weights are poorly chosen, the network’s output will be wrong, so training must find weights that make the output correct for the given input.

Consider a network with a layer structure of 4, 5, 3, 2. The number of parameters feeding hidden layer L2 is (4 + 1) * 5 = 25, where 4 is the number of input variables in L1, 5 is the number of neurons in L2, and the + 1 accounts for one bias term per L2 neuron. In other words, each of the five L2 neurons has four weights, one per input X, plus one bias, for a total of 20 weights and 5 biases.
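The same arithmetic can be applied to every layer of the 4, 5, 3, 2 structure. The following is a minimal sketch; the function name `count_parameters` is made up for illustration.

```python
def count_parameters(layer_sizes):
    """Return (weights, biases) counts for each layer after the input layer."""
    counts = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        # n_in weights per neuron, plus one bias per neuron
        counts.append((n_in * n_out, n_out))
    return counts

layer_sizes = [4, 5, 3, 2]
per_layer = count_parameters(layer_sizes)
totals = [weights + biases for weights, biases in per_layer]

print(per_layer)    # [(20, 5), (15, 3), (6, 2)]
print(totals)       # [25, 18, 8]
print(sum(totals))  # 51
```

The first entry reproduces the (4 + 1) * 5 = 25 figure for L2.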

Each neuron has three components: a bias, weights, and an activation function. The bias is the negative of the threshold that the weighted input must reach for the neuron to fire. The weight on an input determines how important that input is relative to the others.

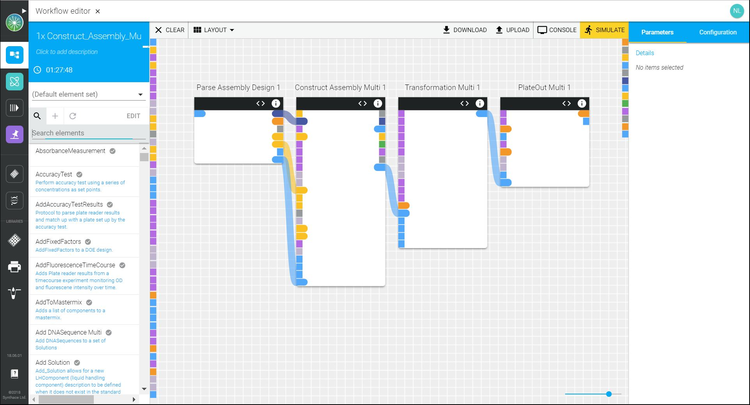

Step 1: initialize the weights and biases of the neural network. Step 2: forward propagation. Using the given input X, the weights W, and the biases b, compute for every layer a linear combination of inputs and weights (Z), and then apply the activation function to Z to obtain the activation A.
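The two steps can be sketched in plain Python. This is an illustrative sketch, not a reference implementation: the sigmoid activation, the uniform range of -0.5 to 0.5, the zero biases, and the helper names `init_layer` and `forward` are all assumptions chosen for the example.

```python
import math
import random

def init_layer(n_in, n_out, rng):
    """Step 1: small random weights and zero biases for one layer (assumed scheme)."""
    W = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    b = [0.0] * n_out
    return W, b

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, layers):
    """Step 2: for each layer compute Z = W.a + b, then A = sigmoid(Z)."""
    a = x
    for W, b in layers:
        z = [sum(w_ij * a_j for w_ij, a_j in zip(row, a)) + b_i
             for row, b_i in zip(W, b)]
        a = [sigmoid(z_i) for z_i in z]
    return a

rng = random.Random(0)  # fixed seed so the run is repeatable
sizes = [4, 5, 3, 2]
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
output = forward([0.2, -0.1, 0.4, 0.7], layers)
print(output)  # two values, each between 0 and 1
```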

The values computed during forward propagation are then used to update the weights. This matters most when training runs for many iterations: connections that need to be ‘heavy’ become ‘heavier’. Weights are not restricted to the range 0 to 1, and many examples of neural networks have weights greater than one.

Why Is Weight Initialization Necessary?

Weight initialization matters because it affects whether the neural network converges to a solution close to the global minimum. If the weights are not initialized properly, training may get stuck in a poor local minimum, which prevents the network from finding a good solution.

The Importance Of Weight Initialization In Neural Networks

One of the most important considerations when constructing a neural network model is the initialization of weights. Each node computes a weighted sum of its inputs, so the starting values of the weights shape everything the network computes. A tempting choice is to start every weight at the same value, such as zero. If all the weights are initialized to 0, the derivative of the loss function is the same for every w in W[l], which means that all the weights in the layer still share the same value in subsequent iterations. Initializing weights with values that are much too large or much too small can also slow down optimization. Random initialization breaks this symmetry.
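The symmetry problem can be seen on a toy network. The sketch below assumes a network with 2 inputs, 3 sigmoid hidden neurons, one linear output, no biases, and a squared-error loss; these choices and the helper name `hidden_gradients` are illustrative only. It uses a nonzero constant so that the shared gradient is visibly nonzero; all zeros is a special case of the same behavior.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def hidden_gradients(W1, w2, x, y):
    """Gradient of 0.5 * (y_hat - y)**2 with respect to W1, one row per hidden neuron."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in W1]
    y_hat = sum(w * hk for w, hk in zip(w2, h))
    err = y_hat - y
    # Chain rule: dL/dW1[k][i] = err * w2[k] * h[k] * (1 - h[k]) * x[i]
    return [[err * w2k * hk * (1.0 - hk) * xi for xi in x]
            for w2k, hk in zip(w2, h)]

x, y = [1.0, 2.0], 1.0
n_hidden = 3

# Constant initialization: every hidden neuron starts as a copy of the others.
W1_const = [[0.5, 0.5] for _ in range(n_hidden)]
w2_const = [0.5] * n_hidden
grads_const = hidden_gradients(W1_const, w2_const, x, y)

# Random initialization: each hidden neuron starts differently.
rng = random.Random(0)
W1_rand = [[rng.uniform(-0.5, 0.5) for _ in x] for _ in range(n_hidden)]
w2_rand = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
grads_rand = hidden_gradients(W1_rand, w2_rand, x, y)

print(grads_const)  # all rows identical
print(grads_rand)   # rows differ
```

Because the constant-initialized neurons receive identical gradients, a gradient step leaves them identical, and they never specialize.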

Why Do The Weights For A Neural Network Need To Be Small When Initialized?

The weights of artificial neural networks should be initialized to small random numbers. This is an expectation of the stochastic optimization algorithm used to train the model.
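One commonly cited reason for keeping initial weights small, which is not stated above and is offered here as background, is that saturating activations such as the sigmoid have almost no gradient when their input is large. The sketch below simply evaluates the sigmoid derivative at a few inputs.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    """Derivative of the sigmoid: s * (1 - s)."""
    s = sigmoid(z)
    return s * (1.0 - s)

for z in (0.1, 2.0, 10.0):
    print(z, sigmoid_grad(z))
```

Near zero the derivative is close to its maximum of 0.25, while at an input of 10 it is smaller than 0.0001, so weight updates that pass through such a neuron become tiny.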

Initialization Strategies For Deep Neural Networks

Initialization strategies for deep neural networks vary. Some use small random numbers, while others use heuristics that take into account information such as the type of activation function and the number of inputs to each node. If you initialize the weights of a neural network to zero, the derivative is the same for each w in W[l]. The neurons in a layer then stay identical to one another, so the network fails to break symmetry.
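Two widely used heuristics of this kind, named here as examples rather than taken from the text above, are Xavier (Glorot) uniform initialization, often paired with tanh or sigmoid activations, and He normal initialization, often paired with ReLU. A minimal sketch, with hypothetical function names:

```python
import math
import random

def xavier_uniform(n_in, n_out, rng):
    """Uniform in [-limit, limit] with limit = sqrt(6 / (n_in + n_out))."""
    limit = math.sqrt(6.0 / (n_in + n_out))
    return [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]

def he_normal(n_in, n_out, rng):
    """Gaussian with mean 0 and standard deviation sqrt(2 / n_in)."""
    std = math.sqrt(2.0 / n_in)
    return [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]

rng = random.Random(0)
W_xavier = xavier_uniform(4, 5, rng)  # 5 neurons, 4 inputs each
W_he = he_normal(4, 5, rng)
print(len(W_xavier), len(W_xavier[0]))  # 5 4
```

Both scale the random values by the number of connections, so layers with many inputs start with proportionally smaller weights.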

What Are Weights In A Neural Network

A neural network is a collection of nodes, or neurons. Each node has a set of inputs, weights, and a bias value. The weights are the parameters that transform input data into output data as it passes through the network’s hidden layers.

Initialization is critical here. The initial weights determine which features of the data the network emphasizes at the start of training. As a result, it is important to initialize the weights randomly rather than symmetrically.

If all the weights in a layer are the same, every neuron in that layer produces the same output. The neurons then receive identical updates during training, so the layer cannot learn more than a single neuron could.

For a neural network to learn, the weights must be initialized randomly with different values. If all of the neurons in a layer are the same, they remain copies of one another and the extra neurons add nothing. Random initialization is how neural networks break this symmetry.

With random initialization, the neurons in a layer produce different outputs from one another. This is important because it lets them learn to respond to different features of the data.

What Are Weights And Bias In Neural Network?

A weight sets the strength of the signal that one neuron passes to another. The weight on an input determines how much influence that input has on the output. A bias is a constant term added to a neuron’s weighted sum; it can be viewed as the weight on an extra input whose value is always 1.
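A single neuron’s pre-activation value can be sketched as follows. The input values, weights, and the function name `neuron_output` are made up for illustration. The second call shows the bias treated as one more weight on a constant input of 1.

```python
def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias (before any activation function)."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.25]
bias = 0.1

z = neuron_output(inputs, weights, bias)

# Same computation with the bias folded in as a weight on a constant input of 1.
z_augmented = neuron_output(inputs + [1.0], weights + [bias], 0.0)

print(z, z_augmented)
```

The second input has the largest weight in magnitude, so changes to it move the output the most.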

Why Are Weights Needed In Neural Networks?

The weight of a connection represents the strength of the link between two units. For example, if the weight from node 1 to node 2 has a large magnitude, node 1 has a strong influence on node 2. An input with a weight close to zero has little significance.

How Weights Are Calculated In Neural Networks

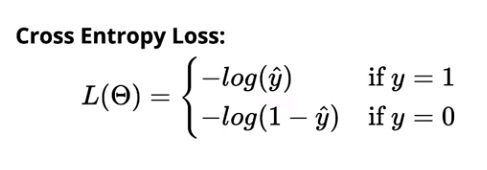

Weights in neural networks are first initialized to small random values. They are then adjusted during training using a technique called backpropagation, which works out how much each weight contributes to the error so that the weights can be changed in a way that minimizes it.
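In its simplest form, the adjustment applied to a single weight can be written as the standard gradient descent update below. The symbols are assumptions introduced for illustration: $L$ is the error (loss), $w$ is one weight, and $\eta$ is a small positive learning rate.

$$
w \leftarrow w - \eta \, \frac{\partial L}{\partial w}
$$

Backpropagation supplies the derivative $\partial L / \partial w$ for every weight in the network, and the update moves each weight a small step in the direction that lowers the error.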

How To Calculate Weights In Neural Networks

Calculating the weights is one of the most important tasks in building a neural network. The weights are real values, one for each input/feature, and each represents the importance of its feature in predicting the final output. The gradient descent algorithm finds good values by repeatedly adjusting each weight in the direction that reduces the prediction error. The weights in place at the end of training are the ones the network uses to make its predictions.
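Gradient descent can be illustrated on the smallest possible case: a single linear neuron with one weight and one bias, fitted to made-up data generated from y = 2x + 1. The data, learning rate, epoch count, and function name are all assumptions for this sketch.

```python
def train_linear_neuron(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ w * x + b by gradient descent on the mean squared error."""
    # Zero initialization is fine here: a single neuron has no symmetry to break.
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum((w * x + b - y) * x for x, y in zip(xs, ys)) * 2.0 / n
        grad_b = sum((w * x + b - y) for x, y in zip(xs, ys)) * 2.0 / n
        # Move each parameter a small step against its gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1
w, b = train_linear_neuron(xs, ys)
print(w, b)  # approximately 2 and 1
```

A full neural network repeats the same idea for every weight, with backpropagation supplying the gradients.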

What Are Weights In Machine Learning

Weights are the parameters of a model that are learned during training. They represent the strength of the connections between the neurons in the network.

The Importance Of Weights In Machine Learning

When designing a model, we must find a function that maps input data points to output values. The same function is applied to every training example, but its behavior depends on the values of its weights. For a simple linear model, we can picture the function as a line drawn through the training examples, and a better model is one whose line passes closer to them. Training adjusts the weights until the line is as close as possible to the training examples.

Weights are needed in machine learning for several reasons. First, a weight indicates how important a feature is in predicting the target value. If a weight is very close to zero, its feature has almost no influence on the prediction, whereas a weight with a large magnitude gives its feature a strong influence. When predicting the temperature in Phoenix, Arizona, for example, temperature readings from Phoenix would be expected to carry more weight than readings from Los Angeles, California.

Second, weights set the orientation, or slope, of the line that separates two or more classes of data points. In a model that predicts gender, for example, training adjusts the weights so that the line rotates and shifts until male and female data points fall on opposite sides of it. The model is able to make more accurate predictions as a result.
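The link between weights and the slope of a separating line can be made concrete for a two-feature linear classifier. In this sketch, the boundary is the line w1*x1 + w2*x2 + b = 0; the weight values and function names are made up for illustration.

```python
def boundary_slope_intercept(w1, w2, b):
    """Rewrite w1*x1 + w2*x2 + b = 0 as x2 = slope * x1 + intercept (requires w2 != 0)."""
    return -w1 / w2, -b / w2

def classify(x1, x2, w1, w2, b):
    """Return 1 for points on the positive side of the line, otherwise 0."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0

print(boundary_slope_intercept(1.0, 1.0, -1.0))  # (-1.0, 1.0)
print(boundary_slope_intercept(2.0, 1.0, -1.0))  # (-2.0, 1.0): a larger w1 makes the line steeper
print(classify(1.0, 1.0, 1.0, 1.0, -1.0))        # 1
print(classify(0.0, 0.0, 1.0, 1.0, -1.0))        # 0
```

Changing the weights rotates the line, and changing the bias shifts it without changing its slope.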

What Are Weights And Biases In Neural Network

Weights and biases are the parameters of a neural network. The weights are the strengths of the connections between the neurons, and the biases act as thresholds for the activation of the neurons.

Bias In Neural Networks

How bias is applied depends on the type of neural network and on the layer. A hidden layer bias is added to the neurons in a hidden layer, and an output layer bias is added to the neurons in the output layer, including a final squashing layer. Every neuron in the hidden layers, as well as every neuron in the output layer, usually has its own bias. Bias terms behave like weights on a constant input, so they are learned during training along with the other weights.