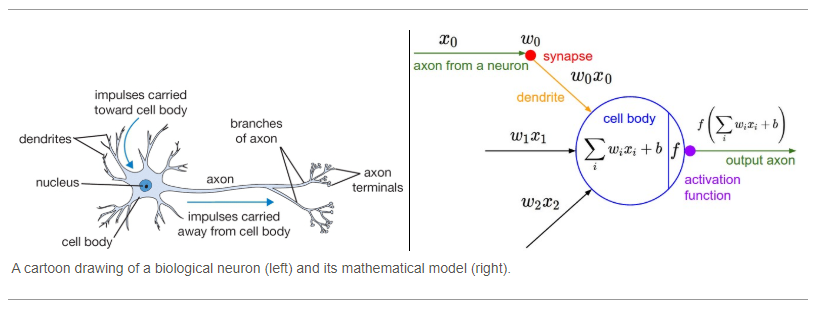

A neural network is a system of interconnected neurons that process information by responding to external stimuli and relaying information to other neurons. The activity of a neural network is constantly changing in response to the inputs it receives, and these changes can be either short-term or long-term. Both kinds are forms of synaptic plasticity, the ability of the connections between neurons to change. Short-term changes briefly strengthen or weaken existing connections, while long-term changes, such as those produced by Hebbian plasticity, persist and can involve the growth or loss of connections between neurons.

What Is The Activation Of A Neural Network?

What is neural network activation? A neuron’s activation function determines whether, and how strongly, the neuron is activated. Using simple mathematical operations, it decides how important the neuron’s input is to the prediction being made.

The activation function is a core component of an artificial neural network. It applies a non-linear transformation to a neuron’s input before the result is sent on to the next layer of neurons or used as the final output, and in doing so it determines which neurons are activated and which are not. A poorly chosen activation function can also hamper a network, slowing its convergence or preventing it from converging at all. Common choices include the logistic (sigmoid) function and the hyperbolic tangent (tanh). The softmax function is frequently referred to as the soft argmax function, and it is the function used in multiclass (multinomial) logistic regression. The Gudermannian function relates the circular and hyperbolic functions without explicitly using complex numbers.
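To see why the non-linear transformation matters, consider a minimal sketch with two hypothetical one-input "layers" (the weights are arbitrary, chosen only for illustration). Without an activation function, stacking the layers collapses into a single linear function; inserting a ReLU between them breaks that linearity.

```python
def linear(weight, bias):
    """Return a one-input, one-output linear 'layer' (hypothetical weights)."""
    return lambda x: weight * x + bias


def relu(x):
    return max(0.0, x)


layer1 = linear(2.0, 1.0)
layer2 = linear(-3.0, 0.5)


def stacked_without_activation(x):
    # layer2(layer1(x)) = -3 * (2x + 1) + 0.5 = -6x - 2.5, still a straight line
    return layer2(layer1(x))


def stacked_with_relu(x):
    # The ReLU clips negative intermediate values to 0, so the result bends
    return layer2(relu(layer1(x)))


for x in (-1.0, 0.0, 1.0):
    print(x, stacked_without_activation(x), stacked_with_relu(x))
```

Without the activation, each unit step in x changes the output by the same amount (-6); with the ReLU, the steps differ (-3, then -6), so the composite function is no longer linear and can represent more complex patterns.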

Many recent Transformers, such as Google’s BERT and OpenAI’s GPT-2, use the Gaussian Error Linear Unit (GELU) activation. The choice of activation also affects how gradients flow during training. With saturating functions such as the sigmoid, gradients shrink as they are propagated backward, so the weights and biases of the initial layers are not updated effectively (the vanishing gradient problem). Conversely, when large error gradients accumulate, they produce very large updates to the model weights (the exploding gradient problem), which makes training unstable. The rectified linear unit (ReLU) is widely used as the default activation for multilayer perceptrons and convolutional neural networks. The exponential linear unit (ELU) aims to combine the strengths of ReLU and leaky ReLU: its negative values push mean unit activations closer to zero, much like batch normalization does, but with lower computational complexity. Swish, an activation proposed by researchers at Google, was reported to match or outperform ReLU on a range of deep models, although it is more expensive to compute.
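The vanishing and exploding gradient problems can be made concrete with a little arithmetic. During backpropagation, the gradient reaching an early layer is, roughly, a product of one factor per later layer. This sketch assumes a deliberately simplified chain in which every layer has the same weight and the same pre-activation value. The derivative of the logistic sigmoid, σ(x)(1 - σ(x)), is at most 0.25, so with a weight of 1 the product shrinks by a factor of four per layer, while a weight of 5 gives a factor of 1.25 that grows with depth.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # peaks at 0.25 when x == 0


def gradient_scale(depth, weight=1.0, pre_activation=0.0):
    """Product of per-layer factors (weight * sigmoid') seen by the first layer.

    Simplifying assumption: every layer has the same weight and the same
    pre-activation value, so each layer contributes an identical factor.
    """
    scale = 1.0
    for _ in range(depth):
        scale *= weight * sigmoid_derivative(pre_activation)
    return scale


for depth in (1, 5, 10, 20):
    # weight=1.0 -> factor 0.25 per layer (vanishing)
    # weight=5.0 -> factor 1.25 per layer (exploding)
    print(depth, gradient_scale(depth), gradient_scale(depth, weight=5.0))
```

With these defaults the first-layer gradient equals 0.25 ** depth, which is under one millionth by depth 10, while the weight-5 chain exceeds 80 by depth 20.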

The Importance Of Activation Functions In Neural Networks

In a neural network, a node’s activation function determines what the node outputs. A node always produces some output when it receives input, but with activation functions such as ReLU or a step function that output can be 0, in which case the node effectively passes nothing on to the next layer.

What Is A Neural Network?

A neural network is a computer system that is modeled after the brain. It is composed of a large number of connected processing nodes, or neurons, that work together to solve complex problems. Neural networks are similar to other machine learning algorithms, but they are composed of a much larger number of parameters and are more difficult to train.

Neural networks, a core technique in artificial intelligence, have become popular in the development of trading systems. A neural network is a collection of algorithms that tries to recognize relationships in a set of data by imitating the way the human brain processes data, and much of this work is now done directly with neural networks. IBM’s Deep Blue, the chess computer that defeated world champion Garry Kasparov in 1997, is credited with paving the way for computer systems capable of handling complex calculations, even though it did not itself use neural networks. Convolutional neural networks stack several kinds of layers to extract features from data and sort it into categories, and they are used for image processing as well as image analysis. A modular neural network is made up of a number of independent networks whose outputs are coordinated to produce a result.
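Convolutional layers are the distinctive part of a CNN. As a minimal sketch in plain Python, the function below slides a small kernel over a grayscale image and sums the element-wise products at each position. The kernel here is a hypothetical, hand-written vertical-edge detector; a real CNN learns its kernel values during training. Like most deep learning libraries, this computes cross-correlation (the kernel is not flipped), which is conventionally still called convolution.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation) of nested lists, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and the image patch
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A tiny image that is dark on the left (0) and bright on the right (1)
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical kernel that responds to a dark-to-bright vertical edge
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

print(conv2d(image, vertical_edge))
```

The output is large only in the column where the image changes from dark to bright, which is the kind of local feature a CNN’s early layers pick up before later layers combine such features into categories.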

Several of these techniques are used to make complex, intricate computing operations more efficient. Neural networks can detect trends, analyze outcomes, and predict future movements in asset markets, among other things. They learn from historical data and predict future outcomes based on how closely new inputs resemble previous ones. For some analytical tasks they can be more efficient than humans or simpler analytical models, and their range of applications keeps expanding. Neural networks underpin much of modern artificial intelligence, and they can process far more data than a human analyst. In finance, they are used to analyze transaction history, predict asset movements, and forecast financial market outcomes. Neural networks are classified into a variety of types depending on the purposes and goals they serve.

Pattern recognition is one of the most useful applications of neural networks. A neural network learns to recognize patterns through a large number of interconnected nodes. Each node decides what to do based on the data it receives and passes its decision on to the nodes in the next layer, which in turn pass their decisions on, and so on. The multilayer perceptron, in which such nodes are organized into successive layers, is one of the most widely used and successful neural networks. It is extremely versatile, and its ability to recognize patterns is especially useful for image recognition, speech recognition, and text recognition.

Neural networks have several advantages over traditional methods. They can learn directly from examples and generalize what they have learned to new data, and they can handle problems that traditional data-handling methods may struggle with, such as recognizing patterns in large amounts of data.

Neural networks also have limitations. A network trained to recognize one kind of pattern does not automatically recognize others, and the data used to train it may contain errors. With the right data and sufficient training, however, neural networks can solve a wide range of problems.
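The layer-by-layer passing of decisions in a multilayer perceptron can be sketched in a few lines. The weights below are hand-picked for illustration, not learned: they make a two-layer network compute XOR (output 1 when exactly one input is 1), a pattern that a single perceptron cannot represent because it is not linearly separable.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """Each node takes a weighted sum of its inputs, adds a bias, applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def mlp_forward(inputs, layers):
    """Pass the outputs ('decisions') of each layer on to the next one."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hand-picked (not learned) weights that make the network compute XOR:
# hidden node 1 acts like OR, hidden node 2 like NAND, the output like AND.
XOR_LAYERS = [
    ([[20.0, 20.0], [-20.0, -20.0]], [-10.0, 30.0]),  # hidden layer
    ([[20.0, 20.0]], [-30.0]),                         # output layer
]

for a in (0, 1):
    for b in (0, 1):
        out = mlp_forward([a, b], XOR_LAYERS)[0]
        print(a, b, round(out))
```

In practice the weights would be found by training rather than written by hand, but the forward pass is the same.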

What Changes As A Neural Network Learns?

As a neural network learns, it changes the weights of the connections between the neurons in order to better model the data it is being given. This can be thought of as the network adjusting its parameters in order to better fit the data.

Neural networks are made up of computational units (neurons), each linked to others by a network of connections. A network can transform data until it is able to categorize it as an output, for example by labeling an image or tagging unstructured text. Michael Skirpan’s neuron-level animation depicts how a network learns. Neural networks are complex systems with emergent behavior: it is the interactions between neurons, rather than the neurons themselves, that enable the network to learn. As the visualization shows, a neural network finds the right answer on its own by tuning its parameters. Much as a brain takes in and processes new information, the network produces a response, reflects on its mistakes, and adjusts to improve its performance in the future. How much intelligence neural networks can ultimately achieve is unknown, but understanding how learning actually works helps us refine our sense of what is possible.

How Does A Neural Network Process Data?

Data is transformed inside the network through a series of computations: each neuron multiplies each of its input values by a weight, sums the results, adjusts the sum by the neuron’s bias, and then passes the resulting number through an activation function to produce its output.
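The computation described above can be traced for a single neuron. In this minimal sketch the inputs, weights, and bias are hypothetical values chosen so the arithmetic is easy to follow; swapping the activation function changes only the final step.

```python
import math


def neuron(inputs, weights, bias, activation):
    # 1. multiply each input by its weight and sum the results
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    # 2. adjust the sum by the neuron's bias
    z = weighted_sum + bias
    # 3. pass the result through the activation function
    return activation(z)


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


inputs = [0.5, -1.0, 2.0]    # hypothetical input values
weights = [0.4, 0.3, -0.1]   # hypothetical weights
bias = 0.2

# z = 0.5*0.4 + (-1.0)*0.3 + 2.0*(-0.1) + 0.2 = -0.1
print(neuron(inputs, weights, bias, relu))     # 0.0, since z is negative
print(neuron(inputs, weights, bias, sigmoid))  # roughly 0.475
```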

Studying neural networks in depth takes us to the heart of machine learning. At its core, a neural network is simply a function that maps inputs to outputs. Training reduces a loss function so that the model becomes as accurate as possible; a handwritten-digit classifier, for example, can reach around 99% accuracy. The simplest building block is the perceptron, which takes several binary inputs and produces a single binary output: yes (1) or no (0), depending on whether a weighted sum of the inputs exceeds a threshold. Tasks such as handwriting and facial recognition can be broken down into a series of such binary decisions, which neural networks can make in the same manner.
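A perceptron’s weighted-sum-and-threshold rule fits in a few lines. In this sketch the weights and thresholds are hypothetical: with two equally weighted binary inputs, a threshold of 1.5 makes the perceptron answer "yes" only when both inputs are 1 (logical AND), while a threshold of 0.5 makes either input sufficient (logical OR).

```python
def perceptron(inputs, weights, threshold):
    """Return 1 ("yes") if the weighted sum exceeds the threshold, else 0 ("no")."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum > threshold else 0


# Hypothetical example: two binary inputs with equal weights.
for a in (0, 1):
    for b in (0, 1):
        and_out = perceptron([a, b], [1, 1], 1.5)  # both inputs required
        or_out = perceptron([a, b], [1, 1], 0.5)   # either input is enough
        print(a, b, and_out, or_out)
```

Changing only the threshold changes the decision the perceptron makes, which is why learning the weights and threshold (or, equivalently, a bias) is the core of training.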

Between the input and the output, a neural network also contains intermediate hidden layers. Training adjusts the weights and biases so that the network’s formula produces the correct value as often as possible. For a neuron with inputs x1, x2, x3, …, xn, we train the weights w1, w2, w3, …, wn and the bias b; in other words, we change the weights and bias, not the inputs, in order to minimize the loss function. A loss function is essentially a measurement of the difference between what was predicted and what actually happened. Stochastic gradient descent is a method for finding a minimum of a loss function that depends on a large number of variables: it repeatedly nudges each parameter in the direction that reduces the loss, estimated from a small random sample of the training data. The MNIST dataset of handwritten digits draws the samples in its training set from about 250 writers. Each sample is a grid of grayscale pixels, and the network learns which pixels are filled in from how dark or light each pixel is. Each sample is labeled with the digit it represents, from 0 to 9.
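Stochastic gradient descent can be illustrated without any framework. This minimal sketch fits a single linear neuron, y ≈ w*x + b, to hypothetical noise-free toy data generated from y = 3x + 1 rather than to MNIST, using a squared-error loss. The "stochastic" part is that examples are visited in a random order and the parameters are updated after each one; the learning rate and epoch count are illustrative assumptions.

```python
import random


def train_sgd(data, learning_rate=0.05, epochs=300, seed=0):
    """Fit y ~ w*x + b by stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)  # visit examples in a random order each epoch
        for x, y in samples:
            error = (w * x + b) - y  # prediction minus target
            # Gradient of the loss (error ** 2) with respect to w and b
            grad_w = 2.0 * error * x
            grad_b = 2.0 * error
            # Step against the gradient: the weight and bias change, not the inputs
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


def mean_squared_error(data, w, b):
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)


# Hypothetical toy data generated from y = 3x + 1 (no noise)
data = [(i / 10, 3 * (i / 10) + 1) for i in range(11)]
w, b = train_sgd(data)
print(w, b, mean_squared_error(data, w, b))  # w and b end up close to 3 and 1
```

A real network applies the same update to every weight and bias, with the gradients computed by backpropagation.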

Neural networks are a subset of machine learning, the branch of artificial intelligence dedicated to teaching computers to recognize patterns in large amounts of data. They can be made up of thousands or even millions of interconnected processing nodes, or neurons, that can be trained to process data.

The first neural networks were intended to mimic the brain’s function. Today, neural networks perform a wide range of tasks, including financial operations, enterprise planning, trading, business analytics, and product maintenance.

With their ability to solve a wide range of problems, quickly make complex decisions, and learn how to process data, neural networks are a powerful and versatile tool for these applications.

How Does A Neural Network Work? An Example

Neural networks are loosely modeled on the human brain. When the brain processes handwriting or recognizes a face, it makes a lot of quick decisions in succession. When identifying a person, for example, the brain may begin by asking, “Is this person female or male?” and then, “Is it black or white?”

The Different Types Of Learning Algorithms

In supervised learning, a teacher provides the correct answer to each problem, and the student tries to learn how to reach it. The student is given a set of training data in which each example is paired with its correct answer and must learn to identify that answer. Naive Bayes classifiers, which are based on Bayesian probability, are one common example of a supervised learning algorithm.

In unsupervised learning, the student is given a set of training data without any answers and must study the data to discover structure in it, such as groups of similar examples. Clustering algorithms such as k-means are commonly used for unsupervised learning.

In reinforcement learning, the student is rewarded for correct answers and must learn, by trial and error, which actions earn the most reward. Q-learning is one of the most commonly used reinforcement learning algorithms.
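Q-learning keeps an estimate Q(state, action) of how much reward an action will eventually earn and nudges that estimate after every step toward reward + gamma * (best estimate for the next state). The sketch below implements that single update rule with a plain dictionary; the states, actions, reward, learning rate alpha, and discount factor gamma are all hypothetical.

```python
def q_learning_update(q_table, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """Apply one Q-learning update and return the new Q(state, action).

    q_table maps (state, action) pairs to estimated values; missing entries
    are treated as 0.0. alpha is the learning rate, gamma the discount factor.
    """
    best_next = max(q_table.get((next_state, a), 0.0) for a in actions)
    old_value = q_table.get((state, action), 0.0)
    # Move the estimate toward: reward + discounted value of the best next action
    target = reward + gamma * best_next
    q_table[(state, action)] = old_value + alpha * (target - old_value)
    return q_table[(state, action)]


# Hypothetical example: taking "right" in state "A" earns a reward of 1.
q = {}
actions = ["left", "right"]
for _ in range(3):
    print(q_learning_update(q, "A", "right", 1.0, "B", actions))
```

Each repetition moves the estimate a fraction alpha of the way toward the target, so the value of a rewarded action climbs gradually rather than jumping straight to the reward.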

Neural Network Example

A neural network is a machine learning algorithm that is used to model complex patterns in data. Neural networks are similar to other machine learning algorithms, but they are composed of a large number of interconnected processing nodes, or neurons, that can learn to recognize patterns of input data.

Neural networks are often used for tasks such as image recognition and classification, pattern recognition, sequence prediction, and forecasting.

An artificial neural network (ANN) is made up of hardware and/or software patterned after the operation of neurons in the human brain. ANNs are the basis of deep learning, which in turn falls under the umbrella of artificial intelligence. Their primary commercial applications are typically solutions to complex signal processing and pattern recognition problems. Artificial neural networks are adaptive: they modify themselves as they learn from initial training and from subsequent runs. The most basic learning model centers on weighting the input streams, which is how each node measures the importance of the data it receives from each of its predecessors. Other approaches to training include gradient-based training, fuzzy logic, genetic algorithms, and Bayesian methods.

Neural networks are often described in terms of their depth, that is, how many layers they have between input and output (the so-called hidden layers). This is why the term neural network is almost always used in conjunction with deep learning. They can also be described by the number of hidden nodes in the model or by how many inputs and outputs each node has.
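These descriptions can be computed directly from a list of layer sizes. This sketch assumes a fully connected network in which every node connects to every node in the next layer and has its own bias; the 784-input, two-hidden-layer, 10-output shape is a hypothetical MNIST-style example (28 x 28 = 784 pixels, one output per digit).

```python
def describe_network(layer_sizes):
    """Summarize a fully connected network given its layer sizes.

    layer_sizes lists the number of nodes per layer, input layer first and
    output layer last. Each node is assumed to connect to every node in the
    next layer and to have its own bias.
    """
    hidden_layers = len(layer_sizes) - 2
    hidden_nodes = sum(layer_sizes[1:-1])
    parameters = sum(n_in * n_out + n_out  # weights plus one bias per node
                     for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))
    return {"hidden_layers": hidden_layers,
            "hidden_nodes": hidden_nodes,
            "parameters": parameters}


# Hypothetical MNIST-style network: 784 pixel inputs, two hidden layers, 10 outputs
print(describe_network([784, 128, 64, 10]))
```

Most of the parameters sit in the first layer (784 x 128 weights), which is typical when the input is a full image.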

Activation Function In Neural Network

An activation function is a mathematical function that determines the output of a node in a neural network by mapping the node’s input value to its output value. Various activation functions can be used, but the most common are the sigmoid function, the tanh function, and the ReLU function.
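The three common functions can be written directly with Python’s standard `math` module. This is a minimal scalar sketch; frameworks provide vectorized versions of the same formulas.

```python
import math


def sigmoid(x):
    """Squashes any input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Squashes any input into the range (-1, 1); zero-centered."""
    return math.tanh(x)


def relu(x):
    """Passes positive inputs through unchanged and maps negatives to 0."""
    return max(0.0, x)


for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.3f}  tanh={tanh(x):+.3f}  relu={relu(x):.1f}")
```

The sigmoid and tanh flatten out for large positive or negative inputs, while ReLU stays linear for all positive inputs, which is one reason it is less prone to vanishing gradients.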

Convolutional neural network (CNN) models have complex architectures, and to train successfully they need suitable activation functions. An activation function can be linear or non-linear and is used to control a neural network’s outputs. CNNs are used in a variety of domains, including object recognition, classification, and speech recognition. The sigmoid function is often used in the output layer of a deep neural network (DNN) to produce probability-like outputs. Its most significant drawbacks are sharply damped gradients during backpropagation, gradient saturation, slow convergence, and output that is not zero-centered. The hyperbolic tangent (tanh) function, by contrast, has a range of -1 to 1 and is zero-centered.

As an alternative to ReLU, exponential linear units (ELUs) are useful because they reduce bias shift by pushing mean activations closer to zero during training. The Swish function is a self-gated activation that multiplies the input by its own sigmoid, and it is less prone to vanishing gradients than the sigmoid itself: f(x) = x · sigmoid(x) = x / (1 + e^(-x)).
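Both functions are short to implement. In this sketch ELU uses the common default alpha = 1.0 (an assumption; alpha is a tunable parameter), and the sigmoid inside Swish is written in a numerically stable form so that very negative inputs do not overflow `math.exp`.

```python
import math


def sigmoid(x):
    # Numerically stable logistic function (avoids overflow for large |x|)
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def elu(x, alpha=1.0):
    """Exponential linear unit: identity for x > 0, alpha * (e^x - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def swish(x):
    """Swish: the input multiplied by its own sigmoid, i.e. x / (1 + e^(-x))."""
    return x * sigmoid(x)


for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"x={x:+.1f}  elu={elu(x):+.4f}  swish={swish(x):+.4f}")
```

For negative inputs ELU levels off at -alpha instead of dropping to 0, which is what lets it produce the negative values that pull mean activations toward zero.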

Why The Softmax Function Is The Second Most Used Activation Function

The most widely used activation, ReLU, avoids the vanishing gradient problem for positive inputs and makes computation more efficient.

The softmax function is often described as the second most commonly used activation function. It is typically used in the final layer of a neural network to generate the output.

It converts the final layer’s raw scores into a probability for each possible output class, and these probabilities sum to 1.
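The softmax exponentiates each raw score and divides by the sum of the exponentials. This minimal sketch subtracts the largest score first, a standard trick that leaves the result unchanged but keeps `math.exp` from overflowing; the three scores are hypothetical final-layer outputs.

```python
import math


def softmax(scores):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    # Subtracting the max does not change the result but avoids overflow in exp
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # hypothetical final-layer scores for three classes
probs = softmax(logits)
print([round(p, 3) for p in probs])  # the largest score gets the largest probability
```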

What Is A Neural Network In The Brain

A neural network is a group of interconnected neurons that transmit signals between each other. The brain is made up of many neural networks that allow it to process and store information.

Deep neural networks are loosely modeled on the human brain, which serves a wide variety of functions. When we look at something, our brain fires off a series of neurons, drawing on thousands to millions of ‘reference images’ from past experience. Because it is simply processing information from past experiences, this requires no explicit programming or special skills. In 1943, Warren McCulloch and Walter Pitts attempted to model the biological function of neurons with artificial neural networks. In 2006, researchers found training techniques that made deep networks work well for tasks like these. Neural networks then enjoyed a resurgence as cloud computing, powerful GPUs, and big data became widely available. Today, neural networks are at the heart of cutting-edge artificial intelligence (AI) technology.

Deep neural networks are the product of decades of research, and they can compete with and even surpass the capabilities of the human brain in a variety of tasks. What are neural networks, and how are they better or worse than conventional approaches? At its most basic, artificial intelligence was designed to mimic human brain function, and artificial neural networks were directly inspired by biological ones. The two differ in how they signal, however: biological neurons communicate through a continuous stream of electrical spikes, whereas an artificial neuron produces a single number for each input, which in the simplest case (a perceptron) is binary.

In an artificial neural network, neurons are arranged in layers stacked one after another. An artificial neural network (ANN) can perform some of the most difficult tasks, such as speech recognition and image classification. There is a significant distinction between the brain and an artificial neural network, however: given the same input, a trained ANN will always produce the same output, whereas the human brain may not. Long short-term memory (LSTM) networks are among the most advanced artificial neural networks. Using gates that decide what to remember and what to forget, they can connect information from distant and recent points in a sequence. Depending on the context, LSTMs can be used to generate search results, generate text, perform machine translation, and carry out a variety of predictive tasks. To identify something, however, an artificial neural network must first be trained on thousands of reference points or data points.
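The way an LSTM connects distant and recent information can be seen in a single memory cell. This is a minimal sketch of one time step for a cell with one unit, following the standard forget/input/output gate formulation; the parameter values are hypothetical, chosen so the forget gate stays almost fully open and the input gate almost closed, which lets a stored value survive many steps.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM cell.

    params maps each gate name to a hypothetical (w_x, w_h, bias) triple.
    """
    def gate(name, activation):
        w_x, w_h, bias = params[name]
        return activation(w_x * x + w_h * h_prev + bias)

    forget = gate("forget", sigmoid)           # how much of the old memory to keep
    input_ = gate("input", sigmoid)            # how much new information to write
    candidate = gate("candidate", math.tanh)   # the new information itself
    output = gate("output", sigmoid)           # how much of the memory to expose

    c = forget * c_prev + input_ * candidate   # updated cell state (memory)
    h = output * math.tanh(c)                  # new hidden state / output
    return h, c


# Hypothetical parameters: forget gate nearly open, input gate nearly closed
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 0.0),
}

h, c = 0.0, 1.0  # start with a value of 1.0 stored in memory
for t in range(50):
    h, c = lstm_step(0.0, h, c, params)
print(round(c, 3))  # still close to 1.0 after 50 steps
```

In a trained LSTM the gate parameters are learned, so the network itself decides which information to carry forward and which to discard.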

The human brain, on the other hand, can take in new information in a matter of seconds. Like biological neural networks, artificial neural networks do not represent knowledge as explicit symbols. It is very difficult to explain, in a clear and meaningful way, how a particular input to a neural network results in its output, just as it is difficult to see how the human mind makes decisions. Many researchers are also finding that artificial neurons learn in ways quite different from those of the human brain.

Neural Networks: Not A Direct Replica Of The Brain

An artificial neural network resembles the brain in that it consists of neurons that communicate with each other to solve problems. It is not, however, a direct replica of the brain. Whereas the brain is capable of learning and generalizing across many tasks, an artificial neural network is designed to solve a specific problem.
