PyTorch is a deep learning framework that builds its computational graph dynamically, which makes it easy and efficient to compute gradients for large, complicated models. Windows Subsystem for Linux (WSL) is a compatibility layer for running Linux binary executables (in ELF format) natively on Windows. Microsoft announced WSL in 2016, and it has been available in Windows 10 since the Anniversary Update in August 2016. The original WSL provides a Linux-compatible kernel interface developed by Microsoft, while WSL 2 runs a real Linux kernel in a lightweight virtual machine. Both let users install unmodified Linux distributions and run Linux applications and tools on Windows.

To use PyTorch this way, install a Linux distribution for WSL by following the instructions on the Microsoft website, then install PyTorch inside it by following the instructions on the PyTorch website. After that, you can run PyTorch programs from the distribution's command line. Distributions such as Ubuntu and openSUSE are available for WSL, and other distributions may also work.

By following the steps in this article, you will be able to install PyTorch on your Linux system. We use Ubuntu 20.04 LTS, but other distributions should work as well. First, check which version of Python you have. If Python 3 is not installed yet, install it before continuing.
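You can check the Python version from a terminal with `python3 --version`, or from the interpreter itself as in the sketch below. The minimum version `(3, 9)` is an assumption for recent PyTorch releases, not a figure from this article; check the PyTorch website for the versions your release supports. The `python_is_supported` helper is hypothetical.

```python
import sys
from typing import Sequence, Tuple

# Assumed minimum version; check pytorch.org for what the current release supports.
MIN_PYTHON: Tuple[int, int] = (3, 9)


def python_is_supported(version: Sequence[int] = sys.version_info[:2],
                        minimum: Tuple[int, int] = MIN_PYTHON) -> bool:
    """Return True if `version` (major, minor, ...) meets the assumed minimum."""
    return tuple(version[:2]) >= minimum


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    if not python_is_supported():
        print("This interpreter is older than", ".".join(map(str, MIN_PYTHON)))
```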

Does PyTorch Work In WSL?

Yes. PyTorch runs inside a WSL distribution just as it does on any other Linux system, so you can build and train deep learning models there. CPU-only use needs nothing special; GPU acceleration uses CUDA, which WSL 2 exposes through the NVIDIA driver installed on Windows.
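A quick way to confirm the GPU side from inside your WSL distribution is sketched below, written so that it also runs before PyTorch is installed. `torch.cuda.is_available()` and `torch.cuda.get_device_name()` are standard PyTorch calls; the `cuda_status` wrapper is a hypothetical helper.

```python
def cuda_status() -> str:
    """Describe whether the installed PyTorch build can see a CUDA GPU."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"
    if torch.cuda.is_available():
        # Index 0 is the first GPU visible to this process.
        return "CUDA available: " + torch.cuda.get_device_name(0)
    return "PyTorch installed; no CUDA device visible (running on CPU)"


if __name__ == "__main__":
    print(cuda_status())
```

If this reports no CUDA device on a machine with an NVIDIA GPU, the usual suspects are the Windows driver or a CPU-only PyTorch build.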

PyTorch On Windows: Python 3.x Only

PyTorch, a deep learning library, is available for use on Windows. Microsoft also offers PyTorch with DirectML, which can run natively on Windows or inside the Windows Subsystem for Linux (WSL), including on Windows 11. To check your Windows build version, press the Windows logo key + R to open the Run dialog, type `winver`, and press Enter. On Windows, PyTorch supports only Python 3.x; Python 2.x is not supported. Verify your Python and Anaconda versions during installation. After installing a CUDA-enabled build, you can move a tensor to the GPU by calling `.cuda()` on it.

Can You Use CUDA In WSL?

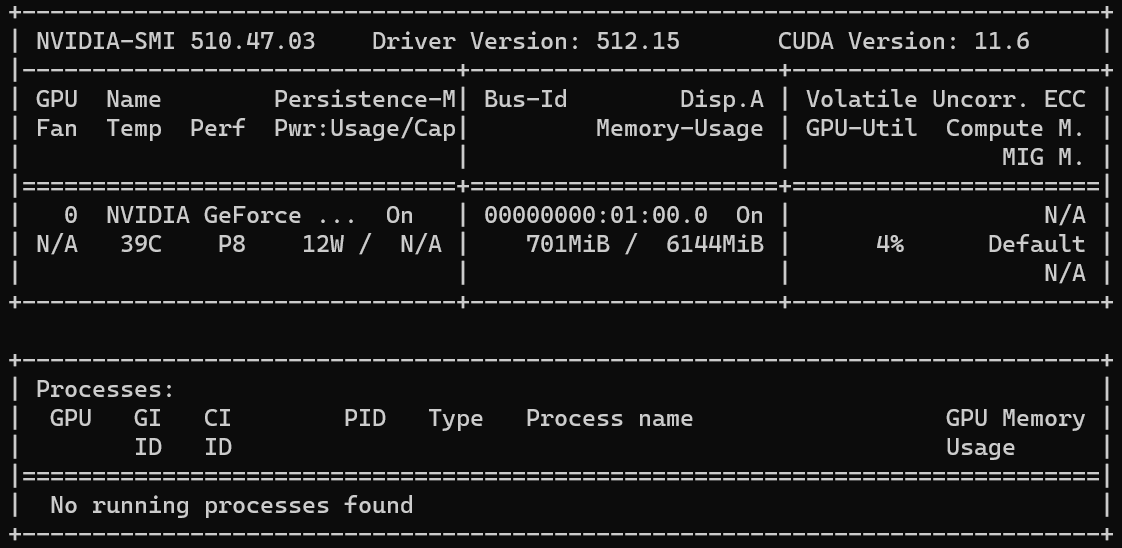

Yes. The WSL 2 environment fully supports CUDA application development, so you can build new applications with the latest CUDA Toolkit for x86 Linux. Once the Windows NVIDIA GPU drivers are installed, CUDA becomes available inside WSL 2.

The Windows Subsystem for Linux (WSL) is a Windows feature that lets users run native Linux applications, containers, and command-line tools directly on Windows. With WSL 2, developers can use NVIDIA CUDA for data science, machine learning, and inference on Windows; GPU acceleration inside Linux is provided by the WSL 2 support in NVIDIA's Windows driver. To build new CUDA applications, you need a CUDA Toolkit for Linux x86. Existing CUDA applications can run unmodified inside WSL using the CUDA-enabled driver.
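Because the driver lives on the Windows side, a useful sanity check inside WSL 2 is whether its libraries are visible to Linux; running `nvidia-smi` in the distribution is the usual interactive check. The sketch below assumes the driver exposes its Linux-side libraries, including a `libcuda.so` stub, under `/usr/lib/wsl/lib`. That path and the `wsl_cuda_driver_visible` helper are assumptions for illustration.

```python
from pathlib import Path
from typing import Union

# Assumption: under WSL 2, the Windows NVIDIA driver exposes its Linux-side
# libraries (including the libcuda.so stub) in this directory.
WSL_DRIVER_LIB_DIR = "/usr/lib/wsl/lib"


def wsl_cuda_driver_visible(lib_dir: Union[str, Path] = WSL_DRIVER_LIB_DIR) -> bool:
    """Return True if a libcuda.so* file exists in lib_dir."""
    directory = Path(lib_dir)
    if not directory.is_dir():
        return False
    return any(entry.name.startswith("libcuda.so") for entry in directory.iterdir())


if __name__ == "__main__":
    if wsl_cuda_driver_visible():
        print("Windows NVIDIA driver libraries are visible inside WSL")
    else:
        print("libcuda not found; check the Windows NVIDIA driver installation")
```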

Be careful at this step. The default CUDA Toolkit packages for Linux come bundled with a driver, but no NVIDIA Linux driver should be installed inside WSL 2, because the Windows driver already provides GPU access. Install only the CUDA Toolkit, for example with NVIDIA's WSL-Ubuntu toolkit package. NVIDIA's CUDA on WSL documentation explains how to test and troubleshoot its latest driver and container toolkit; check its known issues and changelog sections to make sure compatible versions are installed. If you need help with CUDA, contact NVIDIA's product and engineering teams on the WSL 2 Developer Forum. Traditional virtualization solutions require installing and configuring virtualization management software for each guest virtual machine. WSL 2 is also a virtual machine, but a relatively lightweight one that is managed with tools supplied by the host operating system vendor. Containers are lighter still: they provide application composability without the overhead of a virtual machine, which makes them simpler to create and maintain.

Running ML Tools On WSL

With the wide variety of distributions and tools available on WSL, running popular ML tools is simple. This section looks at TensorFlow and Keras, two of the most popular ML libraries. To begin, you need a CUDA-capable GPU and WSL 2; you can check which WSL version each distribution uses by running `wsl -l -v` in PowerShell. After installing the necessary software, confirm that TensorFlow can see your GPU with `tf.config.list_physical_devices("GPU")`. TensorFlow 2 runs eagerly, so there is no session to create: build a model with the bundled Keras API (`tf.keras`), configure it with `model.compile()`, and train it with `model.fit()`.
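A minimal smoke test for the TensorFlow and Keras steps above, assuming TensorFlow 2.x is installed in the WSL distribution. It uses only public APIs (`tf.config.list_physical_devices`, `tf.keras.Sequential`, `compile`, `fit`); the toy data, layer sizes, and the `keras_smoke_test` helper are hypothetical, chosen only for illustration.

```python
from typing import Optional


def keras_smoke_test(epochs: int = 1) -> Optional[float]:
    """Train a tiny Keras model on random data; return the final loss, or None without TensorFlow."""
    try:
        import tensorflow as tf
    except ImportError:
        return None

    print("GPUs visible to TensorFlow:", tf.config.list_physical_devices("GPU"))

    # Hypothetical toy regression problem: predict the sum of 8 random features.
    x = tf.random.normal((256, 8))
    y = tf.reduce_sum(x, axis=1, keepdims=True)

    model = tf.keras.Sequential([tf.keras.Input(shape=(8,)), tf.keras.layers.Dense(1)])
    model.compile(optimizer="adam", loss="mse")
    history = model.fit(x, y, epochs=epochs, batch_size=32, verbose=0)
    return float(history.history["loss"][-1])


if __name__ == "__main__":
    print("final loss:", keras_smoke_test())
```

TensorFlow places operations on a visible GPU automatically, so the same script runs unchanged on CPU-only machines.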

Is WSL Good For Machine Learning?

Machine learning (ML) is becoming an essential component of many development processes. The Windows Subsystem for Linux (WSL) is well suited to data scientists, ML engineers, and anyone who wants to get started with ML and learn how to use GPU-accelerated ML tools.

WSL 2 gives Windows users the full Linux experience. It is based on a lightweight utility virtual machine (VM) and provides a high level of integration between Windows and Linux. In May 2020, Microsoft announced new WSL 2 features, including GPU acceleration through NVIDIA CUDA and DirectML. To check that your WSL 2 kernel is recent enough, run `uname -r` inside the distribution; CUDA support requires kernel version 4.19.121 or later. Next, install Ubuntu in WSL, configure NVIDIA's CUDA network repository, and install the CUDA Toolkit. You can then use TensorFlow and CUDA on Ubuntu to build a development environment for your next machine learning project.
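The kernel check can also be scripted. This sketch parses the `major.minor.patch` prefix of the release string (what `uname -r` prints, read here through `platform.release()`) and compares it numerically against 4.19.121. Plain string comparison would get cases like `4.19.121` versus `4.19.91` wrong. The helper names are hypothetical.

```python
import platform
import re
from typing import Optional, Tuple

# Minimum WSL 2 kernel version for CUDA support, as stated in the text.
MIN_KERNEL: Tuple[int, int, int] = (4, 19, 121)


def kernel_version(release: Optional[str] = None) -> Tuple[int, ...]:
    """Parse the leading 'major.minor.patch' from a kernel release string."""
    release = platform.release() if release is None else release
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return tuple(int(part) for part in match.groups())


def kernel_supports_cuda(release: Optional[str] = None) -> bool:
    """Compare versions as integer tuples, so 4.19.91 < 4.19.121 as expected."""
    return kernel_version(release) >= MIN_KERNEL


if __name__ == "__main__":
    try:
        ok = kernel_supports_cuda()
    except ValueError as exc:
        print(exc)
    else:
        print(platform.release(), "->", "OK" if ok else "older than 4.19.121")
```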

Running a complete Linux distribution on your desktop gives you access to the Linux operating system's vast library of tools and applications. Alongside your usual Windows applications, you can use the many Linux tools available, which makes WSL an excellent choice for developers who want to use the best tools for their work.

Should I Use WSL For Development?

Because WSL is designed for use with Bash and Linux-first tools (such as Ruby and Python), it assists web developers and those who use those tools in maintaining consistency between development and production environments.

The Newest Version Of WSL 2 Is Improved And More Efficient

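Microsoft released WSL 2 with the Windows 10 May 2020 Update (version 2004). It runs a real Linux kernel in a lightweight VM, which gives it full system call compatibility and faster file system performance inside the Linux file system than WSL 1. If you need performance or hardware access beyond what either WSL 1 or WSL 2 provides, you may want to consider dual booting Linux alongside Windows.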
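From inside a distribution you can usually tell WSL 1 from WSL 2 by the kernel release string. The sketch below relies on a heuristic, not an official API: WSL 2 kernels typically contain `microsoft-standard` in their release string, while WSL 1 reports a release ending in `-Microsoft`. Running `wsl -l -v` from PowerShell remains the authoritative check, and the `wsl_generation` helper is hypothetical.

```python
import platform
from typing import Optional


def wsl_generation(release: Optional[str] = None) -> Optional[int]:
    """Guess the WSL generation (1 or 2) from a kernel release; None outside WSL.

    Heuristic: WSL 2 looks like '5.15.90.1-microsoft-standard-WSL2',
    WSL 1 looks like '4.4.0-19041-Microsoft'.
    """
    release = platform.release() if release is None else release
    lowered = release.lower()
    if "microsoft" not in lowered:
        return None
    return 2 if ("microsoft-standard" in lowered or "wsl2" in lowered) else 1


if __name__ == "__main__":
    generation = wsl_generation()
    print("Not running under WSL" if generation is None else f"WSL {generation}")
```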

What Is The Benefit Of Using WSL?

Why not just run Linux in a virtual machine? WSL requires fewer resources (CPU, memory, and storage) than a full virtual machine. You can run Linux command-line tools and applications alongside your Windows command-line, desktop, and Store apps, and access your Windows files from within WSL.

The Pros And Cons Of WSL And Cygwin

Although both WSL and Cygwin have advantages and disadvantages, many people consider WSL the better option. Cygwin provides a POSIX compatibility layer for Windows: Unix tools are recompiled as Windows programs, so developers get familiar tools without managing a separate Linux environment. WSL, by contrast, runs unmodified Linux binaries, so if you want a reliable, genuine Linux environment on Windows, WSL is your best bet.

Install PyTorch

PyTorch is a free and open-source machine learning library for Python, based on Torch, used for applications such as natural language processing. It can be installed via pip.
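pip can also be driven from Python. The sketch below builds the install command for the current interpreter using `sys.executable -m pip`, which avoids installing into a different Python than the one you run. The package names come from this article. The optional `index_url` is a placeholder: for a specific CUDA build, copy the exact `--index-url` value from the PyTorch install selector rather than guessing it. The `pip_install_command` helper is hypothetical.

```python
import subprocess
import sys
from typing import List, Optional, Sequence


def pip_install_command(packages: Sequence[str], index_url: Optional[str] = None) -> List[str]:
    """Build a pip command that installs into the running interpreter's environment."""
    command = [sys.executable, "-m", "pip", "install", *packages]
    if index_url is not None:
        # Placeholder: copy the real value from the PyTorch install selector.
        command += ["--index-url", index_url]
    return command


if __name__ == "__main__":
    command = pip_install_command(["torch", "torchvision"])
    print(" ".join(command))
    if "--run" in sys.argv[1:]:  # only install when explicitly asked
        subprocess.check_call(command)
```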

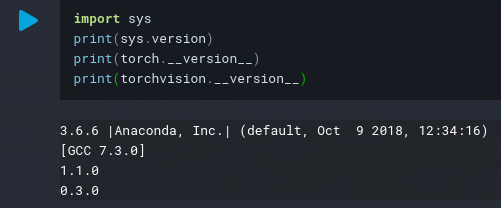

On the PyTorch website, choose your preferences in the install selector and then run the install command it generates. Stable is the most recently tested and supported version of PyTorch and is suitable for most users. Preview (Nightly) provides the latest builds, generated nightly; choose it if you want to test them, bearing in mind that they are not fully tested or supported. We recommend the Anaconda package manager because it installs all dependencies. This tutorial covers the steps required to install PyTorch with Anaconda and its package manager, Conda. Conda is installed with the steps below, and it works the same way on Windows and Linux.

To install Anaconda in your WSL distribution, first download the Linux installer script with the `wget` command. The download takes a few moments. When it is complete, return to your home directory and run the installer script with `bash` to install Anaconda on your system. Finally, run the command the installer suggests in your terminal to check that the installation worked.

Installing PyTorch And Torchvision

Once Anaconda is installed, install PyTorch (if it is not already installed) using the command generated by the selector on the PyTorch website.

Then start `python` and run `import torch`. If everything goes well, you should see output like the following:

Python prints its startup banner, showing the Python version and, on Linux, the GCC version it was built with, followed by `Type "help", "copyright", "credits" or "license" for more information.` and the `>>>` prompt.
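A slightly more thorough check than the interpreter banner is to confirm which versions of torch and torchvision ended up in the environment. This sketch only reads each package's `__version__` attribute via `importlib.import_module`; the `installed_versions` helper is hypothetical. The one-line shell equivalent is `python -c "import torch; print(torch.__version__)"`.

```python
import importlib
from typing import Dict, Iterable, Optional


def installed_versions(names: Iterable[str] = ("torch", "torchvision")) -> Dict[str, Optional[str]]:
    """Map each package name to its __version__, or None if it cannot be imported."""
    versions: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            versions[name] = None
        else:
            versions[name] = str(getattr(module, "__version__", "unknown"))
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'not installed'}")
```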

PyTorch-DirectML

PyTorch-DirectML (the `torch-directml` package) is a backend that lets PyTorch run tensor operations through the DirectML API, so models written in PyTorch can be trained and run on DirectX 12-capable GPUs.

DirectML is a DirectX 12-based machine learning API that PyTorch can use for hardware acceleration on Windows 10 and in WSL. PyTorch itself builds neural networks in a distinctive way: by recording operations and replaying them, like a tape recorder. Its reverse-mode automatic differentiation lets you change how your network behaves arbitrarily without incurring any lag or overhead. PyTorch is designed to be intuitive, linear in thought, and easy to use, and it integrates acceleration libraries such as Intel MKL and NVIDIA's cuDNN and NCCL to maximize speed. Writing new neural network modules, or interfacing with PyTorch's Tensor API, is designed to be straightforward. Building PyTorch from source requires Python 3.6.2 or later and a C++14 compiler; newer releases require newer versions of both.
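The sketch below shows one way to pick a device when CUDA and DirectML might both be available. It assumes the `torch-directml` package, whose `torch_directml.device()` returns a device you can pass to `.to()`. The CUDA, then DirectML, then CPU preference order and both helper names are assumptions for illustration.

```python
from typing import Optional


def pick_backend(cuda_ok: bool, directml_ok: bool) -> str:
    """Choose a backend name; the CUDA > DirectML > CPU order is an assumption."""
    if cuda_ok:
        return "cuda"
    if directml_ok:
        return "directml"
    return "cpu"


def best_device() -> Optional[object]:
    """Return a device for the best installed backend, or None without PyTorch."""
    try:
        import torch
    except ImportError:
        return None
    try:
        import torch_directml  # provided by the `torch-directml` PyPI package
    except ImportError:
        torch_directml = None

    backend = pick_backend(torch.cuda.is_available(), torch_directml is not None)
    if backend == "directml":
        return torch_directml.device()  # DirectML device, usable with tensor.to(...)
    return torch.device(backend)


if __name__ == "__main__":
    device = best_device()
    print("PyTorch not installed" if device is None else f"Using device: {device}")
```

Keeping the choice in a pure function (`pick_backend`) makes the preference order easy to change and test without any GPU present.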

It is also a good idea to install a version of Anaconda. NVIDIA provides separate instructions for installing PyTorch on its Jetson platforms (Jetson Nano, TX1, TX2, AGX Xavier). If you are building on Windows, make sure Visual Studio 2019 version 16.7.6 (MSVC toolchain version 14.27) or higher is installed. Additional libraries such as Magma and oneDNN (also known as MKLDNN or DNNL) are often needed as well. For Docker, you can pull a pre-built image from Docker Hub or build one yourself. PyTorch uses shared memory to share data between processes, so if torch multiprocessing is used (for example, for multithreaded data loaders), the default shared memory segment size that the container runs with is not enough; increase it with the `--ipc=host` or `--shm-size` options to `docker run`.
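Before running multi-worker data loading in a container, it can help to check how much shared memory is available. This sketch reads the size of the `/dev/shm` mount with `shutil.disk_usage`; the `shared_memory_bytes` helper is hypothetical. Docker's small default (64 MB) is the usual reason the segment is too small.

```python
import os
import shutil
from typing import Optional


def shared_memory_bytes(path: str = "/dev/shm") -> Optional[int]:
    """Return the total size of the shared-memory mount, or None if it does not exist."""
    if not os.path.isdir(path):
        return None
    return shutil.disk_usage(path).total


if __name__ == "__main__":
    size = shared_memory_bytes()
    if size is None:
        print("No /dev/shm mount found")
    else:
        # If this is small, restart the container with --ipc=host or a larger --shm-size.
        print(f"/dev/shm: {size / 2**20:.0f} MiB")
```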

If you build the Docker image yourself, set the `PYTHON_VERSION=x.y` make variable to choose the Python version that Miniconda will use. Building the documentation requires Sphinx with the readthedocs theme. PyTorch is a community-driven project led by experienced engineers and researchers. If you want to contribute new features, utility functions, or extensions to the core, the maintainers ask that you first open an issue to discuss what you want to contribute. A pull request sent without that discussion may be rejected, because the core may be heading in a different direction than you are aware of.

PyTorch With DirectML Speeds Up Training And Inference Of Machine Learning Models

PyTorch with DirectML lets users train and evaluate complex machine learning models on a wide range of DirectX 12-compatible hardware. It supports training and inference on recent versions of Windows 10 and in the Windows Subsystem for Linux. The DirectML backend for PyTorch is distributed as a package on PyPI. Windows Machine Learning (Windows ML), in turn, runs models in the Open Neural Network Exchange (ONNX) format; you can train your own model or download a pre-trained one. More information can be found on the Get ONNX models page for Windows ML.

WSL PyTorch GPU

PyTorch is a Python-based scientific computing package for deep learning. It uses graphics processing units (GPUs) to speed up the training of deep neural networks. PyTorch can be used on Windows 10 via the Windows Subsystem for Linux (WSL), which lets users install PyTorch and train their models on a GPU.

NVIDIA GPU Acceleration Now Available On Windows Subsystem For Linux

Developers can use WSL 2 to take advantage of NVIDIA GPU-accelerated computing for data science, machine learning, and inference on Windows. In PyTorch, you move a CPU tensor to the GPU with its `.to()` method (for example `tensor.to("cuda")`), with no extra libraries required; the result is a tensor with the same values on the target device. Models move the same way with `model.to(device)`, and both the data and the model must be on the GPU for the computation to run there. To set this up, first install the GPU driver: install NVIDIA's CUDA-enabled driver for WSL on Windows, not inside the Linux distribution, and your existing CUDA ML workflows can then run inside WSL using that driver.
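Here is what the tensor and model transfer described above looks like in practice. This is a minimal sketch, assuming PyTorch is installed. The toy linear model, random batch, learning rate, and the `one_training_step` helper are hypothetical values chosen for illustration. It falls back to the CPU when no CUDA device is visible, so it also runs on machines without a GPU.

```python
from typing import Optional


def one_training_step(batch_size: int = 16) -> Optional[dict]:
    """Move a toy model and batch to the best device and take one SGD step.

    Returns None when PyTorch is not installed.
    """
    try:
        import torch
        import torch.nn.functional as F
        from torch import nn
    except ImportError:
        return None

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # Model parameters and data must live on the same device.
    model = nn.Linear(4, 1).to(device)
    x = torch.randn(batch_size, 4).to(device)  # same tensor if already on `device`, else a copy
    y = x.sum(dim=1, keepdim=True)             # computed on `device`, no transfer needed

    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    loss = F.mse_loss(model(x), y)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    return {"device": device.type, "loss": loss.item()}


if __name__ == "__main__":
    print(one_training_step())
```

For larger datasets, creating tensors directly with `device=...` avoids an extra CPU-to-GPU copy.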