How do giants like Google, Amazon, and Tesla stay ahead in a competitive business landscape? A large part of the answer is big data. But can data really be the game-changer for companies, propelling them into unparalleled success?

Simply put, it can. The integration of big data has transformed how businesses operate, make decisions, and innovate. Big data is driving the growth of some of the world’s most influential businesses through applications such as personalized recommendations and autonomous driving. Join us on a journey as we decode the strategies of ten industry leaders who are using big data to shape the future.

Take a peek into the data-driven realm where insights pave the way for unprecedented achievements, as we examine how Google optimizes searches, Amazon transforms e-commerce, and Tesla reimagines automobiles. We’ll look at the intricate web of algorithms, analytics, and innovations that make these companies pioneers in the big data era.

Company 1: Google

In the intricate landscape of data analytics, Google stands as a paragon of innovation, strategically wielding big data to redefine the digital experience. Big data runs through nearly everything Google does, from its omnipresent search algorithms to targeted advertising and nuanced user behavior analysis.

Exploring the Algorithms that Power the Web:

Google’s search algorithms, the heartbeat of the internet, are a testament to the transformative capabilities of big data. With every search query, Google dynamically sifts through colossal datasets, leveraging machine learning and predictive analytics to deliver results that are not just relevant but eerily tailored to individual preferences. The search giant has mastered the art of understanding user intent, constantly refining its algorithms to ensure unparalleled accuracy and speed. This intricate dance with data has propelled Google Search to the zenith of the digital realm, making it the go-to platform for information retrieval globally.

Advertising Precision Unleashed:

When it comes to advertising, Google’s utilization of big data is nothing short of revolutionary. Through sophisticated algorithms and machine learning models, Google Ads doesn’t just display ads; it crafts personalized experiences for users based on their preferences, behaviors, and online interactions. Advertisers benefit from this precision targeting, reaching audiences with unprecedented relevance. The amalgamation of big data with advertising is a testament to Google’s commitment to delivering value not only to users but also to businesses aiming for the utmost impact in the digital marketplace.

Decoding User Behavior with Finesse:

Understanding user behavior is at the core of Google’s mission, and big data is the lens through which this understanding is achieved. Analyzing massive datasets generated by users across Google’s ecosystem – from search queries to interactions with various products – provides profound insights. This data-driven approach enables Google to anticipate user needs, enhance user experiences, and tailor its offerings with a level of customization that defines the forefront of technological innovation.

Projects and Tools:

Several specific projects and tools developed by Google show the company’s commitment to pushing the boundaries of what is achievable with big data.

-

Google Analytics:

- Google Analytics stands tall as a quintessential tool that empowers businesses to decipher user interactions with their websites. From traffic sources to user demographics, Google Analytics mines extensive datasets to provide actionable insights, enabling businesses to refine their online strategies.

-

TensorFlow:

- An open-source machine learning library, TensorFlow exemplifies Google’s dedication to advancing artificial intelligence. By leveraging big data to train intricate neural networks, TensorFlow has become a cornerstone for developers and researchers venturing into the realms of machine learning and deep learning.

-

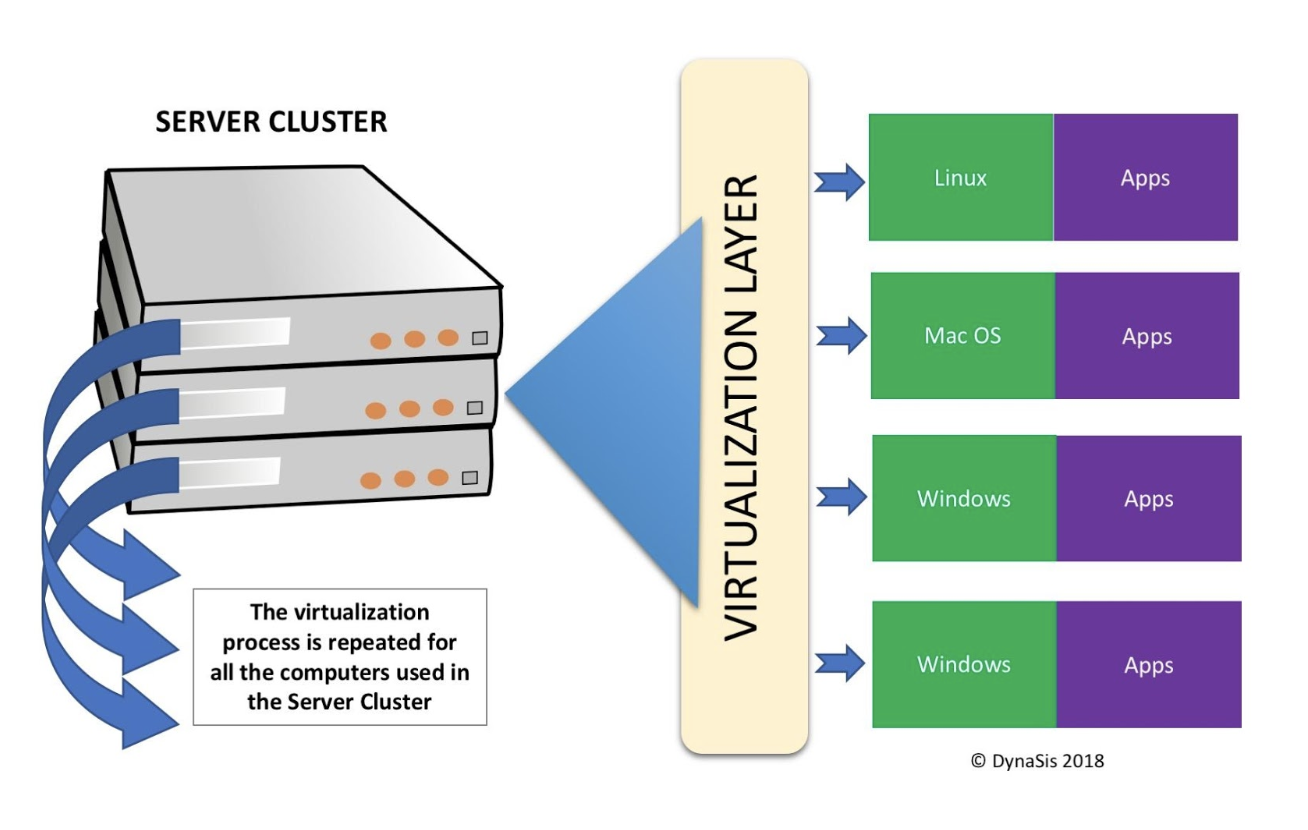

Google Cloud Platform (GCP):

- GCP is Google’s cloud computing offering, providing a robust infrastructure for businesses to harness big data and drive innovation. From scalable storage solutions to powerful data analytics tools, GCP is an embodiment of Google’s commitment to democratizing access to advanced computing resources.

In essence, Google’s mastery of big data is not just a technical feat; it’s a narrative of how data, when wielded with precision, can shape the digital landscape. From refining search experiences to revolutionizing advertising and understanding user behavior, Google’s journey into the realms of big data exemplifies a relentless pursuit of excellence, where each algorithm, each ad, and each data point contributes to an evolving narrative of innovation.

Company 2: Amazon

In the dynamic landscape of e-commerce giants, Amazon stands as an exemplar, utilizing big data as the linchpin in its strategy for personalized recommendations, supply chain optimization, and customer service. This retail juggernaut has ingeniously woven big data analytics into the very fabric of its operations, redefining the customer experience and reshaping the global supply chain.

Revolutionizing Personalized Recommendations:

At the heart of Amazon’s success lies its ability to decode the intricate web of customer preferences through big data analytics. The personalized recommendations engine, a cornerstone of Amazon’s user interface, analyzes vast datasets comprising user browsing history, purchase patterns, and even the duration spent on product pages. This data-driven approach transcends traditional retail models, presenting customers with tailored suggestions that not only enhance their shopping experience but also drive increased sales for the e-commerce giant.
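To make the idea concrete, here is a minimal sketch of one common way to turn purchase histories into suggestions: item-to-item cosine similarity over co-purchases. It is an illustration only and not a description of Amazon’s production system; the `orders` data, the product names, and the `item_similarity` and `recommend` helpers are all made up for the example.

```python
from collections import defaultdict
from itertools import combinations
from math import sqrt

# Hypothetical purchase histories: user -> set of product IDs (made-up data).
orders = {
    "u1": {"kettle", "teapot", "mug"},
    "u2": {"kettle", "teapot"},
    "u3": {"teapot", "mug"},
    "u4": {"laptop", "mouse"},
}


def item_similarity(orders):
    """Cosine similarity between items, based on how often they share a buyer."""
    bought = defaultdict(int)    # item -> number of buyers
    together = defaultdict(int)  # (item_a, item_b) -> number of shared buyers
    for items in orders.values():
        for item in items:
            bought[item] += 1
        for a, b in combinations(sorted(items), 2):
            together[(a, b)] += 1
    sim = defaultdict(dict)
    for (a, b), shared in together.items():
        score = shared / sqrt(bought[a] * bought[b])
        sim[a][b] = score
        sim[b][a] = score
    return sim


def recommend(user, orders, top_n=2):
    """Rank items the user has not bought by summed similarity to their purchases."""
    sim = item_similarity(orders)
    owned = orders[user]
    scores = defaultdict(float)
    for item in owned:
        for other, score in sim[item].items():
            if other not in owned:
                scores[other] += score
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda i: (-scores[i], i))[:top_n]


print(recommend("u2", orders))  # → ['mug']
```

A real recommender would also weigh browsing history and time on page, as described above, and would precompute similarities offline rather than on every request.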

Supply Chain Optimization as the Cornerstone:

Amazon’s supply chain prowess is nothing short of legendary, and big data is the secret sauce that propels its efficiency. Through predictive analytics and real-time data monitoring, Amazon optimizes its supply chain to minimize lead times, reduce costs, and maximize overall operational efficiency. The data-driven approach extends beyond warehousing to encompass demand forecasting, allowing Amazon to anticipate customer needs, manage inventory levels effectively, and orchestrate a supply chain ballet that is the envy of the industry.

Elevating Customer Service through Data:

Customer service is paramount in Amazon’s ethos, and big data is the driving force behind its commitment to excellence in this domain. Amazon leverages customer interaction data to gain insights into user behavior, preferences, and pain points. This enables the company to not only resolve customer queries promptly but also proactively address potential issues. The result is a seamless customer service experience that fosters loyalty and sets a benchmark for the industry.

AWS: The Cloud Powerhouse Fueled by Data:

Big data also plays a central role in the success of Amazon Web Services (AWS), whose cloud dominance is intricately interwoven with data-driven insights.

- Scalable Infrastructure: AWS’s ability to offer scalable infrastructure is underpinned by big data analytics. The dynamic allocation of resources, driven by real-time data analysis, ensures optimal performance and cost efficiency for businesses leveraging the cloud platform.

- Data Storage and Processing: Big data is at the core of AWS’s storage and processing capabilities. From Amazon S3 for secure object storage to Amazon Redshift for data warehousing, AWS’s suite of services is a testament to the company’s prowess in handling massive datasets with unparalleled efficiency.

- Analytics and Machine Learning: AWS’s analytics and machine learning services, such as Amazon Athena and Amazon SageMaker, leverage big data to empower businesses to extract actionable insights and build intelligent applications. The integration of big data with cloud services solidifies AWS’s position as a frontrunner in the cloud computing realm.

In essence, Amazon’s journey with big data goes beyond mere analytics – it’s a strategic imperative that defines the company’s ability to innovate, compete, and lead in the ever-evolving landscape of e-commerce and cloud computing. The symbiotic relationship between Amazon and big data showcases not only the transformative power of analytics but also the enduring impact it can have on redefining industries.

Company 3: Facebook

In the expansive realm of social media dominance, Facebook emerges as a juggernaut, orchestrating a symphony of big data to shape targeted advertising, content personalization, and user engagement. The intricate dance between user-generated content and algorithmic precision has positioned Facebook as a trailblazer in leveraging big data for a myriad of purposes, while simultaneously drawing scrutiny for controversies surrounding privacy concerns.

Targeted Advertising Precision:

Facebook’s utilization of big data in the realm of targeted advertising is nothing short of a strategic masterpiece. Through extensive data mining of user interactions, preferences, and demographic information, Facebook’s advertising algorithms create a bespoke experience for each user. Advertisers benefit from this precision, reaching specific audiences with tailored content that resonates with individual preferences. The result is not just advertisements but personalized engagements that blur the lines between user content and sponsored messages.

Content Personalization at Scale:

The backbone of Facebook’s appeal lies in its ability to curate content that aligns with individual interests. Big data plays a pivotal role in deciphering user behavior, understanding content consumption patterns, and predicting what users might find engaging. The algorithmic wizardry ensures that each user’s feed is a carefully curated tapestry of posts, images, and videos, creating an addictive user experience. In essence, Facebook has mastered the art of personalization at scale, making each user’s journey through the platform a unique and tailored experience.

User Engagement Redefined:

Beyond passive content consumption, Facebook thrives on user engagement, and big data serves as the fuel for this interactive dynamism. Analyzing patterns of user engagement – from likes and shares to comments and reactions – Facebook’s algorithms adapt in real-time to amplify content that resonates with a broader audience. This perpetual loop of data-driven engagement not only retains users on the platform but also ensures a continuous stream of fresh and relevant content.
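The feedback loop described above can be illustrated with a toy ranking function: each post gets a weighted interaction score that decays with age, and the feed is sorted by that score. This is a simplified sketch, not Facebook’s actual ranking model; the weights, the half-life, and the `Post` fields are assumptions chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical weights: how much each interaction type counts toward the score.
WEIGHTS = {"likes": 1.0, "comments": 4.0, "shares": 8.0}


@dataclass
class Post:
    post_id: str
    likes: int
    comments: int
    shares: int
    age_hours: float


def engagement_score(post: Post, half_life_hours: float = 6.0) -> float:
    """Weighted interaction count, halved every `half_life_hours` of post age."""
    raw = (
        WEIGHTS["likes"] * post.likes
        + WEIGHTS["comments"] * post.comments
        + WEIGHTS["shares"] * post.shares
    )
    decay = 0.5 ** (post.age_hours / half_life_hours)
    return raw * decay


def rank_feed(posts):
    """Return post IDs ordered from highest to lowest engagement score."""
    return [p.post_id for p in sorted(posts, key=engagement_score, reverse=True)]


posts = [
    Post("old_viral", likes=400, comments=50, shares=20, age_hours=24),
    Post("fresh_hit", likes=60, comments=10, shares=5, age_hours=1),
    Post("quiet", likes=3, comments=0, shares=0, age_hours=2),
]
print(rank_feed(posts))  # → ['fresh_hit', 'old_viral', 'quiet']
```

The time decay is what lets a fresh post with modest engagement outrank an older post with far more total interactions.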

Controversies and Privacy Concerns:

However, Facebook’s use of big data has drawn its fair share of controversy, predominantly centered around privacy concerns and data mishandling.

- Cambridge Analytica Scandal: One of the most notable controversies involved the unauthorized access and harvesting of user data by Cambridge Analytica. The scandal raised serious questions about Facebook’s data protection measures and triggered a global conversation about the ethical implications of data usage for targeted political advertising.

- Algorithmic Bias and Filter Bubbles: Critics argue that Facebook’s algorithms contribute to the creation of filter bubbles, reinforcing users’ existing beliefs and limiting exposure to diverse perspectives. The algorithmic bias, unintentional or not, raises concerns about the impact of big data on shaping individual worldviews.

- Privacy Settings and Data Collection: Facebook’s evolving privacy settings have faced scrutiny over the years. From concerns about default settings favoring data collection to controversies surrounding third-party app permissions, the company has navigated a delicate balance between personalized user experiences and safeguarding user privacy.

In navigating the complex intersection of big data, targeted advertising, and user engagement, Facebook exemplifies the dual nature of innovation – a powerful tool for personalization and engagement, yet a potential source of ethical quandaries. As the social media landscape evolves, the scrutiny on Facebook’s data practices continues, underlining the ongoing discourse about the responsible use of big data in the digital age.

Company 4: Alibaba

In the vibrant landscape of e-commerce, Alibaba stands as a formidable force, strategically employing big data to revolutionize not only its core e-commerce operations but also logistics and financial services. The intricate dance between data analytics and business operations has positioned Alibaba as a trailblazer, shaping the digital frontier with unparalleled innovation.

Harnessing Big Data in E-commerce:

Alibaba’s e-commerce prowess is intricately interwoven with its adept utilization of big data, transforming the online shopping experience for millions of users.

- Personalized Shopping Experience: Through the analysis of user behavior, preferences, and historical purchase data, Alibaba tailors its platform to provide users with a personalized shopping journey. From product recommendations to targeted promotions, big data algorithms ensure that users encounter a curated selection of products that align with their tastes.

- Supply Chain Optimization: Alibaba optimizes its supply chain through big data analytics, ensuring seamless logistics and efficient inventory management. Real-time data insights enable the company to predict demand, streamline shipping processes, and minimize delivery times, contributing to an unparalleled customer experience.

- Fraud Prevention and Security: Big data plays a crucial role in enhancing security measures on Alibaba’s platform. Advanced analytics identify and prevent fraudulent activities, protecting both buyers and sellers. This commitment to security fosters trust within the Alibaba ecosystem, a cornerstone of its success.
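As a rough illustration of the fraud-prevention idea, the sketch below flags a transaction whose amount deviates sharply from an account’s own history, using a simple z-score. It is a deliberately basic stand-in for the "advanced analytics" mentioned above, not Alibaba’s method; the function name, the threshold of 3 standard deviations, and the sample amounts are assumptions.

```python
from statistics import mean, stdev


def is_suspicious(history, amount, threshold=3.0):
    """Flag `amount` if it lies more than `threshold` standard deviations
    from the mean of the account's past transaction amounts."""
    if len(history) < 2:
        return False  # not enough history to estimate a spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return amount != mu  # every past amount was identical
    return abs(amount - mu) / sigma > threshold


# Hypothetical past order amounts for one buyer.
past_orders = [20, 25, 22, 19, 24, 21, 23]

print(is_suspicious(past_orders, 26))   # → False
print(is_suspicious(past_orders, 400))  # → True
```

Production systems typically combine many more signals (device, location, seller history) and learned models, but the principle of comparing new activity against an established baseline is the same.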

Logistics Revolutionized:

Beyond e-commerce, Alibaba leverages big data to redefine logistics, streamlining operations and elevating efficiency to unprecedented levels.

- Smart Warehousing: Alibaba employs big data in the optimization of warehouse operations. Automated systems powered by data analytics facilitate efficient inventory management, order processing, and even predictive maintenance, contributing to the overall agility of the logistics network.

- Route Optimization: The logistics arm of Alibaba employs big data algorithms to optimize delivery routes, reducing transit times and enhancing the reliability of deliveries. This strategic use of data ensures that the last-mile delivery experience aligns with customer expectations.

Financial Services Empowered:

In the realm of financial services, Alibaba has harnessed big data to pioneer innovative solutions, transcending traditional banking models.

- Credit Scoring and Risk Management: Ant Group, Alibaba’s financial services affiliate, utilizes big data to assess creditworthiness and manage risks. The analysis of user behavior and transaction history contributes to the development of robust credit scoring models, expanding access to financial services for a broader demographic.

- Personalized Financial Products: Big data analytics enable Alibaba to offer personalized financial products, from microloans to investment opportunities. Tailored offerings based on user data ensure that financial services align with the diverse needs and preferences of Alibaba’s user base.

Singles’ Day and the Big Data Phenomenon:

Alibaba’s Singles’ Day sales event is a testament to the transformative impact of big data on e-commerce.

- Record-breaking Sales: Singles’ Day, a shopping extravaganza originating in China, has become the world’s largest online shopping event. Alibaba leverages big data to anticipate consumer trends, personalize promotions, and optimize inventory, contributing to record-breaking sales figures year after year.

- Real-time Analytics: During Singles’ Day, Alibaba processes vast amounts of real-time data to monitor consumer interactions, track sales performance, and adjust strategies on the fly. This agile response to data insights ensures that the event remains a dynamic and engaging experience for shoppers.

In essence, Alibaba’s mastery of big data extends far beyond conventional e-commerce practices, permeating every facet of its operations. From personalized shopping experiences to logistics optimization and financial innovations, Alibaba’s strategic integration of big data sets a benchmark for the industry. As the digital landscape continues to evolve, Alibaba’s commitment to leveraging data as a catalyst for innovation positions it as a trailblazer in the future of e-commerce and beyond.

Company 5: IBM

In the ever-evolving landscape of big data, IBM stands as a trailblazer, spearheading transformative initiatives through its revolutionary Watson Analytics and cloud-based data solutions. IBM’s foray into big data is not just a technological stride; it’s a narrative of innovation, pushing the boundaries of what’s possible in the data-driven era.

Watson Analytics: Unleashing the Power of Cognitive Computing:

At the forefront of IBM’s big data endeavors is Watson Analytics, a cognitive computing platform that epitomizes the marriage of artificial intelligence and data analytics. This sophisticated tool empowers users to uncover actionable insights from vast datasets without the need for extensive programming expertise.

- Natural Language Processing (NLP): Watson Analytics incorporates NLP, allowing users to interact with data in a conversational manner. This democratization of data analysis ensures that individuals across various domains can harness the power of big data without being data scientists.

- Predictive Analytics: Predictive analytics capabilities embedded within Watson Analytics enable users to forecast trends, identify patterns, and make informed decisions. The platform’s ability to analyze historical data and extrapolate future outcomes has positioned it as a cornerstone for businesses seeking a competitive edge in dynamic markets.
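The phrase "analyze historical data and extrapolate future outcomes" can be shown in its simplest form: fit a straight line to past values with least squares and extend it forward. The sketch below does this in plain Python. It says nothing about how Watson Analytics works internally; the `fit_line` and `forecast` helpers and the sales figures are hypothetical.

```python
def fit_line(values):
    """Least-squares slope and intercept for values observed at x = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return slope, intercept


def forecast(values, steps_ahead=1):
    """Extrapolate the fitted trend `steps_ahead` periods past the last observation."""
    slope, intercept = fit_line(values)
    return intercept + slope * (len(values) - 1 + steps_ahead)


# Hypothetical monthly sales figures.
monthly_sales = [100, 110, 120, 130]
print(forecast(monthly_sales))     # → 140.0
print(forecast(monthly_sales, 3))  # → 160.0
```

A linear trend is only a baseline; real predictive tooling would account for seasonality, noise, and uncertainty in the estimate.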

Cloud-Based Data Solutions: Revolutionizing Accessibility and Scalability:

IBM’s commitment to making big data accessible is further exemplified through its cloud-based data solutions. Leveraging the scalability and flexibility of cloud infrastructure, IBM provides a suite of services that cater to the diverse needs of businesses navigating the data landscape.

- IBM Cloud Pak for Data: IBM Cloud Pak for Data is a comprehensive data and AI platform that unifies and simplifies the management of structured and unstructured data. This cloud-native solution fosters collaboration among data professionals, data engineers, and data scientists, ensuring a holistic approach to data-driven decision-making.

- Hybrid Cloud Deployments: IBM’s cloud-based data solutions embrace hybrid cloud deployments, acknowledging the diverse IT environments businesses operate in. This flexibility enables organizations to seamlessly integrate on-premises infrastructure with cloud resources, ensuring a cohesive and adaptable approach to big data management.

Contributions to Big Data Technologies:

IBM’s impact on the development of big data technologies transcends its proprietary solutions, with significant contributions that have shaped the broader ecosystem.

- Apache Hadoop: IBM has contributed to the development and support of Apache Hadoop, an open-source framework for distributed storage and processing of large datasets, underlining the company’s commitment to advancing foundational technologies in the big data realm.

- Apache Spark: IBM has played a pivotal role in the evolution of Apache Spark, a powerful and fast big data processing engine. Through collaborative efforts and investments in Spark-related projects, IBM has contributed to the enhancement of real-time analytics and machine learning capabilities within the Spark framework.

- Open Source Initiatives: IBM actively participates in and sponsors various open-source initiatives related to big data technologies. This commitment to an open and collaborative approach underscores IBM’s vision for an inclusive and innovative big data ecosystem.

In essence, IBM’s involvement in big data transcends the realm of a technology provider; it is a driving force behind the democratization of data analytics and the continual evolution of foundational technologies. The narrative of Watson Analytics, coupled with IBM’s cloud-based data solutions and contributions to open-source projects, paints a picture of a company deeply committed to shaping the future of big data in a manner that is accessible, scalable, and inherently transformative. As the digital landscape continues to evolve, IBM’s legacy in the big data arena remains an indelible mark on the tapestry of technological innovation.

Company 6: Netflix

In the realm of entertainment, Netflix has emerged as a global powerhouse, seamlessly integrating big data into its fabric to revolutionize content recommendations, gain deep audience insights, and make informed content production decisions. The success of Netflix in the fiercely competitive streaming landscape is not merely a stroke of luck; it is a narrative shaped by the strategic and data-driven decisions that underscore the company’s approach to delivering personalized and compelling content experiences.

Personalized Content Recommendations:

Netflix’s adept use of big data for content recommendations has become synonymous with its brand. The streaming giant employs sophisticated algorithms that analyze vast datasets comprising user viewing history, preferences, and even time spent on particular genres or shows. This meticulous data analysis manifests in a tailored content recommendation system that anticipates user preferences and continually refines its suggestions.

- Machine Learning Algorithms: Netflix leverages machine learning algorithms to decipher intricate patterns in user behavior. These algorithms adapt and evolve, learning from every click, pause, or rewatch, creating a personalized content ecosystem that resonates with individual tastes.

- Dynamic User Profiles: The platform allows users to create multiple profiles within a single account, and each profile becomes a canvas for personalized recommendations. This feature ensures that each member of a household receives content suggestions aligned with their unique viewing habits.

Audience Insights Driving Content Creation:

Netflix’s foray into content production is not just guided by creative intuition; it’s deeply rooted in data-driven insights that reveal audience preferences and trends. Big data serves as a compass, directing Netflix toward the creation of content that captures the zeitgeist and resonates with diverse viewer demographics.

- Genre Popularity and Trends: Through data analysis, Netflix identifies the popularity of specific genres and emerging trends. This insight informs strategic decisions regarding the production of original content, ensuring that the platform remains at the forefront of evolving viewer preferences.

- Viewer Demographics: Netflix delves into demographic data to understand the nuanced preferences of different audience segments. This granular insight shapes content creation strategies, allowing for the development of diverse and inclusive content that caters to a broad spectrum of viewers.

Data-Driven Decision-Making: The Core of Netflix’s Success:

The unparalleled success of Netflix is intrinsically linked to its commitment to data-driven decision-making. Every aspect of the streaming service, from content acquisition to user interface design, reflects a meticulous analysis of user data.

- Optimizing Content Libraries: Netflix optimizes its content libraries based on viewer engagement data. This involves not only acquiring popular titles but also investing in niche content that caters to specific audience segments, creating a comprehensive and diverse entertainment ecosystem.

- User Interface Optimization: The platform’s user interface undergoes continuous refinement based on data insights. Netflix analyzes user interactions to enhance the discoverability of content, ensuring that users can easily navigate through a vast library to find content aligned with their preferences.

- Global Expansion Strategies: Netflix’s global expansion strategies are shaped by data-driven insights into cultural nuances and viewing habits across different regions. This approach allows Netflix to tailor its content offerings and marketing strategies for maximum impact in diverse international markets.

Company 7: Uber

In the fast-paced world of ride-sharing, Uber has seamlessly integrated big data into its operations, transforming the transportation landscape through ride optimization, innovative pricing strategies, and effective driver-partner matching. The use of big data is not just a technological augmentation for Uber; it is the very pulse of its dynamic ecosystem, driving efficiency and enhancing the overall user experience.

Ride Optimization:

Uber’s use of big data for ride optimization is a testament to its commitment to efficiency and seamless user experiences. Leveraging advanced algorithms, the platform analyzes real-time data to optimize routes, reduce wait times, and enhance the overall reliability of its service.

- Dynamic Route Planning: Uber’s algorithms dynamically adjust routes based on real-time traffic data, ensuring that users reach their destinations in the quickest and most efficient manner. This adaptive approach to route planning enhances the reliability of the service, making it a preferred choice for users seeking prompt transportation.

- Demand Prediction: Big data analytics enable Uber to predict and respond to demand fluctuations. By analyzing historical data and considering external factors such as events or weather conditions, Uber anticipates peak demand periods, optimizing driver allocation and ensuring a seamless experience for users.
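Dynamic route planning of the kind described above can be sketched as a shortest-path search in which each road’s travel time is scaled by a live traffic factor. The example uses Dijkstra’s algorithm, a standard textbook choice, and is not a description of Uber’s routing engine; the road graph, the traffic multipliers, and the `fastest_route` helper are invented for illustration.

```python
import heapq


def fastest_route(graph, traffic, start, goal):
    """Dijkstra's algorithm over travel times scaled by a per-road traffic factor.

    graph:   {node: {neighbor: free_flow_minutes}}
    traffic: {(node, neighbor): multiplier}; roads not listed default to 1.0
    Returns (total_minutes, path), or (inf, []) if the goal is unreachable.
    """
    best = {start: 0.0}
    previous = {}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            path = [node]
            while node in previous:
                node = previous[node]
                path.append(node)
            return cost, path[::-1]
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry; a cheaper route was already found
        for neighbor, minutes in graph.get(node, {}).items():
            new_cost = cost + minutes * traffic.get((node, neighbor), 1.0)
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    return float("inf"), []


# Hypothetical road network with free-flow travel times in minutes.
roads = {"A": {"B": 5, "C": 10}, "B": {"D": 5}, "C": {"D": 2}, "D": {}}

print(fastest_route(roads, {}, "A", "D"))                  # → (10.0, ['A', 'B', 'D'])
print(fastest_route(roads, {("A", "B"): 3.0}, "A", "D"))   # → (12.0, ['A', 'C', 'D'])
```

In the second call, congestion triples the A to B travel time, so the planner switches to the route through C.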

Pricing Strategies:

Uber’s pricing strategies are intricately tied to big data analytics, allowing for dynamic and responsive fare adjustments. The platform employs a sophisticated pricing model that takes into account various factors, including demand, supply, and external conditions.

- Surge Pricing Optimization: Surge pricing, a controversial but effective strategy employed by Uber during peak demand, is intricately linked to big data. The platform dynamically adjusts prices based on real-time demand patterns, incentivizing more drivers to be available during high-demand periods and ensuring that users have access to rides when they need them the most.

- Personalized Pricing: Uber’s use of big data extends to personalized pricing strategies. The platform analyzes user behavior, location, and historical ride data to offer targeted promotions and discounts, creating a personalized pricing experience that caters to individual preferences.
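A toy version of demand-based pricing helps show the mechanism: when open ride requests outnumber available drivers, a multiplier above 1.0 is applied to the base fare, up to a cap. The formula, the `sensitivity` and `cap` parameters, and the function names below are assumptions made for illustration; Uber’s actual pricing model is not public in this form.

```python
def surge_multiplier(open_requests, available_drivers, sensitivity=0.5, cap=3.0):
    """Toy surge rule: scale price with excess demand, clamped to [1.0, cap]."""
    if available_drivers <= 0:
        return cap  # no supply at all: charge the maximum allowed multiplier
    ratio = open_requests / available_drivers
    multiplier = 1.0 + sensitivity * max(0.0, ratio - 1.0)
    return round(min(cap, multiplier), 2)


def fare(base_fare, open_requests, available_drivers):
    """Apply the surge multiplier to a base fare."""
    return round(base_fare * surge_multiplier(open_requests, available_drivers), 2)


print(surge_multiplier(50, 100))    # → 1.0  (supply exceeds demand)
print(surge_multiplier(300, 100))   # → 2.0  (three requests per driver)
print(surge_multiplier(1000, 100))  # → 3.0  (capped)
print(fare(12.0, 300, 100))         # → 24.0
```

The cap is one simple way to address the transparency and fairness concerns that surge pricing tends to raise.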

Driver-Partner Matching:

Big data plays a pivotal role in Uber’s driver-partner matching process, ensuring that the platform efficiently connects drivers with riders based on various criteria, including proximity, availability, and user preferences.

- Algorithmic Matching: Uber’s algorithms use a combination of real-time and historical data to match riders with the most suitable available drivers. This data-driven approach enhances the efficiency of the matching process, reducing wait times for users and optimizing driver utilization.

- User Ratings and Preferences: Big data analytics incorporate user ratings and preferences into the matching algorithm. By considering factors such as a user’s preferred type of vehicle or favorite drivers, Uber enhances the overall user experience by providing tailored and enjoyable rides.
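The matching criteria listed above (proximity, availability, ratings) can be combined in a very small sketch: filter drivers by availability and a minimum rating, then pick the nearest one by great-circle distance. This is a simplification for illustration, not Uber’s dispatch algorithm; the driver records, the `min_rating` parameter, and both helper functions are hypothetical.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def match_driver(rider_location, drivers, min_rating=4.5):
    """Return the ID of the closest available driver meeting the minimum rating,
    or None if no driver qualifies."""
    candidates = [d for d in drivers if d["available"] and d["rating"] >= min_rating]
    if not candidates:
        return None
    nearest = min(candidates, key=lambda d: haversine_km(rider_location, d["location"]))
    return nearest["id"]


# Hypothetical driver records.
drivers = [
    {"id": "d1", "location": (37.7750, -122.4195), "rating": 4.2, "available": True},
    {"id": "d2", "location": (37.7800, -122.4100), "rating": 4.9, "available": True},
    {"id": "d3", "location": (37.7760, -122.4190), "rating": 4.8, "available": False},
    {"id": "d4", "location": (37.8000, -122.4000), "rating": 4.7, "available": True},
]
rider = (37.7749, -122.4194)

print(match_driver(rider, drivers))                  # → d2
print(match_driver(rider, drivers, min_rating=4.0))  # → d1
```

Here the closest driver (`d1`) is skipped under the default rating threshold, which shows how preference filters can trade a slightly longer pickup for a better-rated match.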

Challenges and Controversies:

While Uber’s use of big data has undeniably transformed the transportation industry, it has not been without challenges and controversies. The platform has faced scrutiny and legal challenges related to privacy concerns, surge pricing practices, and the handling of user data.

- Privacy Concerns: Uber has encountered privacy concerns related to the collection and use of user data. Controversies have arisen over the extent to which the platform tracks user locations and the implications of such data collection on user privacy.

- Surge Pricing Criticism: Surge pricing, while an effective tool for managing demand, has faced criticism for its perceived lack of transparency and the potential exploitation of users during high-demand periods. Striking the right balance between dynamic pricing and user satisfaction remains a challenge.

- Data Security and Handling: Uber has faced challenges in ensuring the security and responsible handling of user data. Data breaches and concerns about the platform’s data security practices have raised questions about the safeguarding of sensitive information.

Company 8: Microsoft

In the realm of technology giants, Microsoft stands as a pioneer in harnessing the power of big data analytics, with a particular focus on its Azure services and the transformative tool, Power BI. Microsoft’s journey into big data is not just an exploration; it is a strategic integration that has redefined how enterprises approach data analytics and derive actionable insights.

Azure Services: Unleashing the Potential of Big Data:

Microsoft Azure, the cloud computing platform by Microsoft, serves as the canvas where the company paints its masterpiece in big data analytics. The platform offers a suite of services tailored to handle vast datasets, implement advanced analytics, and drive innovation across various industries.

- Azure Data Lake Storage: Microsoft Azure Data Lake Storage provides a scalable and secure repository for big data analytics. It allows enterprises to seamlessly ingest, store, and analyze diverse data types, fostering a comprehensive approach to data-driven decision-making.

- Azure Databricks: Azure Databricks, a collaborative analytics platform, empowers data scientists and analysts to perform large-scale data processing and analytics. The platform combines the power of Apache Spark with Azure, enabling real-time data exploration and insights.

Power BI: Transforming Data into Actionable Insights:

At the heart of Microsoft’s big data strategy is Power BI, a business analytics tool that enables users to visualize and share insights across an organization. Power BI goes beyond mere data visualization; it serves as the bridge between raw data and informed decision-making.

- Intuitive Data Visualization: Power BI’s intuitive interface allows users to create interactive and visually compelling dashboards. Through drag-and-drop functionality, users can transform complex datasets into meaningful visualizations, facilitating a deeper understanding of data trends.

- Integration with Azure Services: The seamless integration between Power BI and Azure services amplifies its capabilities. Users can directly connect to Azure data sources, ensuring a smooth flow of data from Azure services to Power BI for analysis and reporting.

Big Data in Enterprise Solutions:

Microsoft’s commitment to integrating big data into enterprise solutions extends across its product ecosystem. From Azure-powered solutions to the integration of big data analytics in Microsoft 365, the company ensures that organizations can unlock the full potential of their data.

-

Advanced Analytics in Microsoft 365:

- Microsoft 365 incorporates advanced analytics features, allowing users to derive insights from their productivity data. Whether it’s analyzing collaboration patterns or identifying areas for process improvement, Microsoft 365 brings the benefits of big data analytics to daily work routines.

Azure Synapse Analytics:

- Formerly known as Azure SQL Data Warehouse, Azure Synapse Analytics integrates with Power BI and other Azure services. It enables organizations to analyze large volumes of data in near real time, fostering a data-driven culture and enabling informed decision-making.

Microsoft’s Impact on the Future of Big Data:

As Microsoft continues to pioneer advancements in big data analytics, its impact on the future of data-driven decision-making is undeniable. The democratization of data through user-friendly tools like Power BI, coupled with the scalability and flexibility of Azure services, positions Microsoft as a catalyst for innovation in the evolving landscape of big data.

Company 9: Tesla

In the automotive realm, Tesla has emerged not just as a manufacturer of electric vehicles but as a trailblazer in the integration of big data, transforming the driving experience and reshaping the industry landscape. Tesla’s utilization of big data extends beyond conventional boundaries, encompassing autonomous driving, predictive maintenance, and energy optimization, positioning the company at the forefront of automotive innovation.

Autonomous Driving: Navigating the Future with Data Precision:

Tesla’s foray into autonomous driving is deeply intertwined with the power of big data analytics. The company’s fleet of vehicles, equipped with an array of sensors and cameras, continuously gathers vast amounts of data, creating a dynamic and evolving dataset that serves as the foundation for advancing autonomous driving capabilities.

Machine Learning Algorithms:

- Tesla employs machine learning algorithms that leverage big data to enhance the capabilities of its Autopilot system. The algorithms analyze real-world driving scenarios, learning from the collective experiences of Tesla vehicles to improve decision-making processes in varied driving conditions.

Data-Driven Iterative Improvement:

- Through over-the-air updates, Tesla harnesses big data to implement iterative improvements to its Autopilot functionality. This data-driven approach enables the company to enhance the precision and safety of autonomous features, marking a departure from traditional automotive models.

Predictive Maintenance: Anticipating Needs Through Data Insights:

Tesla’s approach to vehicle maintenance is a testament to the proactive use of big data analytics. The company leverages real-time data from its vehicles to predict and address maintenance needs before they escalate, ensuring optimal performance and longevity.

Telemetric Data Analysis:

- Tesla’s vehicles constantly transmit telemetric data, providing insights into various components’ health and performance. By analyzing this data, Tesla can identify potential issues and notify vehicle owners, allowing for timely maintenance and reducing the likelihood of unexpected breakdowns.

Remote Diagnostics and Proactive Servicing:

- Big data enables Tesla to remotely diagnose potential issues, often resolving them through software updates. This proactive approach not only enhances the overall reliability of Tesla vehicles but also minimizes the need for physical servicing, providing a seamless and efficient customer experience.
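To make the idea of predictive maintenance concrete, here is a minimal sketch of how a stream of sensor readings could be screened for unusual values. It is not Tesla’s actual method, which is not public; the function name `flag_anomalies`, the window size, the threshold, and the sample battery temperatures are all hypothetical and chosen only for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, z_threshold=3.0):
    """Return indices of readings that deviate sharply from the preceding window.

    Hypothetical helper: compares each reading with the mean and standard
    deviation of the `window` readings just before it (a rolling z-score).
    """
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as a deviation.
            if readings[i] != mu:
                flagged.append(i)
            continue
        if abs(readings[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged


# Made-up battery temperatures in degrees Celsius, with one obvious spike.
battery_temps_c = [30.1, 30.3, 29.9, 30.2, 30.0, 30.1, 41.5, 30.2]
print(flag_anomalies(battery_temps_c))  # prints [6]
```

A production system would combine many signals and far more sophisticated models, but the principle is the same: compare what a component is doing now with what it normally does, and raise a flag before the deviation turns into a breakdown.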

Energy Optimization: Redefining Sustainability in Transportation:

Tesla’s commitment to sustainability extends beyond electric vehicles to energy optimization, where big data plays a pivotal role in maximizing energy efficiency and minimizing environmental impact.

Smart Energy Management:

- Through big data analytics, Tesla optimizes energy consumption in its electric vehicles. The data-driven algorithms consider factors such as driving patterns, charging history, and environmental conditions to intelligently manage energy usage, extending the range of electric vehicles.

Grid Connectivity and Energy Storage:

- Tesla’s big data initiatives extend to energy solutions such as the Powerwall and Powerpack. By collecting and analyzing data on energy consumption patterns, Tesla enhances the efficiency of its energy storage products, contributing to a more resilient and sustainable energy grid.

The Role of Big Data in Automotive Innovation:

Tesla’s prowess in the automotive industry is inseparable from its innovative use of big data. The company’s relentless pursuit of data-driven solutions has not only redefined the driving experience but has also set a precedent for the broader automotive landscape.

Continuous Innovation and Iteration:

- Tesla’s reliance on big data fosters a culture of continuous innovation. The iterative improvements delivered through software updates showcase the company’s commitment to refining and expanding its offerings based on real-world data and user feedback.

Industry Influence and Benchmarking:

- Tesla’s innovative use of big data has positioned the company as an industry benchmark. Competitors look to Tesla’s data-driven approach as a standard for integrating technology into their own vehicles, extending its influence across the automotive sector.

Company 10: Spotify

In the dynamic realm of music streaming, Spotify stands as a paragon of innovation, seamlessly integrating big data to redefine the user experience and reshape the music industry landscape. The utilization of big data by Spotify transcends conventional boundaries, encompassing music recommendations, personalized playlists, and user engagement, marking the company as a trailblazer in the intersection of technology and entertainment.

Music Recommendations: Harmonizing Data for Personalized Tunes:

At the heart of Spotify’s success lies its adept use of big data to curate personalized music recommendations. The platform leverages sophisticated algorithms that analyze user behavior, preferences, and historical listening patterns, creating a dynamic and responsive system that adapts to individual tastes.

Algorithmic Precision:

- Spotify’s recommendation algorithms utilize a diverse set of data points, including the user’s listening history, genre preferences, and even the time of day. This data-driven approach ensures that recommendations are not only tailored to individual tastes but also evolve over time to reflect changing preferences.

Discover Weekly and Release Radar:

- Features like Discover Weekly and Release Radar epitomize Spotify’s mastery in using big data for music discovery. These playlists are meticulously crafted based on user habits and artist affinities, introducing listeners to new tracks that align with their musical inclinations.
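The intuition behind recommendations built on listening history can be sketched in a few lines. The example below is a toy version of user-based collaborative filtering, not Spotify’s actual algorithm; the `recommend` function, the user names, and the track names are all hypothetical.

```python
from collections import Counter


def recommend(target_user, listening_history, top_n=3):
    """Suggest tracks played by listeners whose taste overlaps the target user's.

    Hypothetical helper: each candidate track is scored by how many tracks
    its listener shares with the target user.
    """
    target_tracks = listening_history[target_user]
    scores = Counter()
    for user, tracks in listening_history.items():
        if user == target_user:
            continue
        overlap = len(target_tracks & tracks)
        if overlap == 0:
            continue  # No shared taste, so this listener contributes nothing.
        for track in tracks - target_tracks:
            scores[track] += overlap
    # Highest score first; ties broken alphabetically for a stable order.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [track for track, _ in ranked[:top_n]]


# Made-up listening histories: each user maps to the set of tracks they played.
history = {
    "ana": {"song_a", "song_b", "song_c"},
    "ben": {"song_a", "song_b", "song_d"},
    "cai": {"song_b", "song_e"},
    "dee": {"song_f"},
}
print(recommend("ana", history))  # prints ['song_d', 'song_e']
```

Here `song_d` ranks first because its listener shares two tracks with the target user, while `song_f` never appears because its only listener has nothing in common with her. Real systems add signals such as time of day and audio features, but overlap in listening behavior remains a useful starting point.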

Personalized Playlists: Crafting Sonic Journeys with Data Insights:

Spotify’s proficiency in leveraging big data extends to the creation of personalized playlists, allowing users to embark on curated sonic journeys that resonate with their unique preferences.

Daily Mixes and Personal Playlists:

- Through the analysis of user data, Spotify generates Daily Mixes that amalgamate favorite tracks with new discoveries, creating a seamless and personalized listening experience. Personal playlists, influenced by user-generated content and collaborative playlists, further exemplify Spotify’s commitment to crafting individualized musical narratives.

Data-Driven Collaborations:

- Spotify’s data-centric approach extends to collaborative playlists, where users can collectively contribute to a playlist. By analyzing collaborative playlist data, Spotify enhances the platform’s social and communal aspects, fostering a sense of shared musical exploration.

User Engagement: Orchestrating Interaction Through Data:

The success of Spotify hinges not only on music delivery but also on user engagement, an arena where big data plays a pivotal role. Spotify employs data-driven strategies to enhance user interaction, making the platform a dynamic and immersive musical hub.

Behavioral Analytics:

- Spotify meticulously analyzes user behavior, tracking interactions such as likes, skips, and playlist creation. This granular level of behavioral data allows the platform to tailor its interface, recommend relevant content, and refine its overall user experience continually.

Podcast and Audio Content Integration:

- Spotify’s foray into podcasts and exclusive audio content demonstrates its strategic use of big data to diversify user engagement. By understanding user interests and preferences, Spotify curates a vast library of podcasts, further enriching the platform’s content ecosystem.
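Behavioral signals such as skips become useful once they are aggregated. As a rough illustration, assuming a hypothetical event log of `(track, action)` pairs where the action is either `"skip"` or `"complete"`, a per-track skip rate could be computed like this. The log format and the `skip_rates` function are invented for this sketch and do not describe Spotify’s internal systems.

```python
from collections import defaultdict


def skip_rates(events):
    """Return the fraction of plays that ended in a skip, per track.

    Hypothetical helper: `events` is an iterable of (track, action) pairs,
    where action is "skip" or "complete".
    """
    plays = defaultdict(int)
    skips = defaultdict(int)
    for track, action in events:
        plays[track] += 1
        if action == "skip":
            skips[track] += 1
    return {track: skips[track] / plays[track] for track in plays}


# Made-up interaction log.
events = [
    ("track_1", "skip"),
    ("track_1", "complete"),
    ("track_2", "complete"),
    ("track_1", "skip"),
    ("track_2", "complete"),
]
rates = skip_rates(events)
print(rates["track_2"])  # prints 0.0
```

In this sample, `track_1` is skipped on two of its three plays while `track_2` is never skipped. A track that is skipped most of the time is a weak candidate for future recommendations, which is one simple way interaction data can feed back into what a listener hears next.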

Impact of Data-Driven Algorithms on the Music Streaming Industry:

Spotify’s pioneering use of data-driven algorithms reverberates beyond its individual success, influencing the broader music streaming industry and setting a precedent for the seamless integration of technology and entertainment.

Competitive Landscape Shifts:

- Spotify’s data-centric model has spurred a shift in the competitive landscape of the music streaming industry. Competitors now strive to emulate Spotify’s success by investing in robust data analytics to enhance user satisfaction and retention.

Evolving Business Models:

- The impact of data-driven algorithms extends to evolving business models within the music streaming industry. As platforms increasingly prioritize personalized content delivery, subscription models gain prominence, emphasizing the value of tailored music experiences.

In conclusion

Our exploration of big data has revealed a tapestry of innovation woven by industry leaders. Google, Amazon, Tesla, and the other companies profiled here have embraced big data to transform their industries. Using data analytics to improve decision-making, customer experiences, and product development has proven to be a game-changer.

Reflecting on the strategies these 10 companies have employed, it is clear that big data is more than just a tool for making critical business decisions. Organizations have crossed new technological boundaries thanks to the intricate dance between algorithms and insights, transforming how we find information, shop, and even commute.

Harnessing and interpreting massive amounts of data is an important component of success in a rapidly changing technology and business landscape. The ever-changing intersection of data and commerce will bring new innovations, new challenges, and new ethical considerations. As we bid adieu to this adventure, the question remains: what’s next on the horizon for big data, and which companies will lead the charge into uncharted territory? What’s the next chapter in this data-driven adventure?