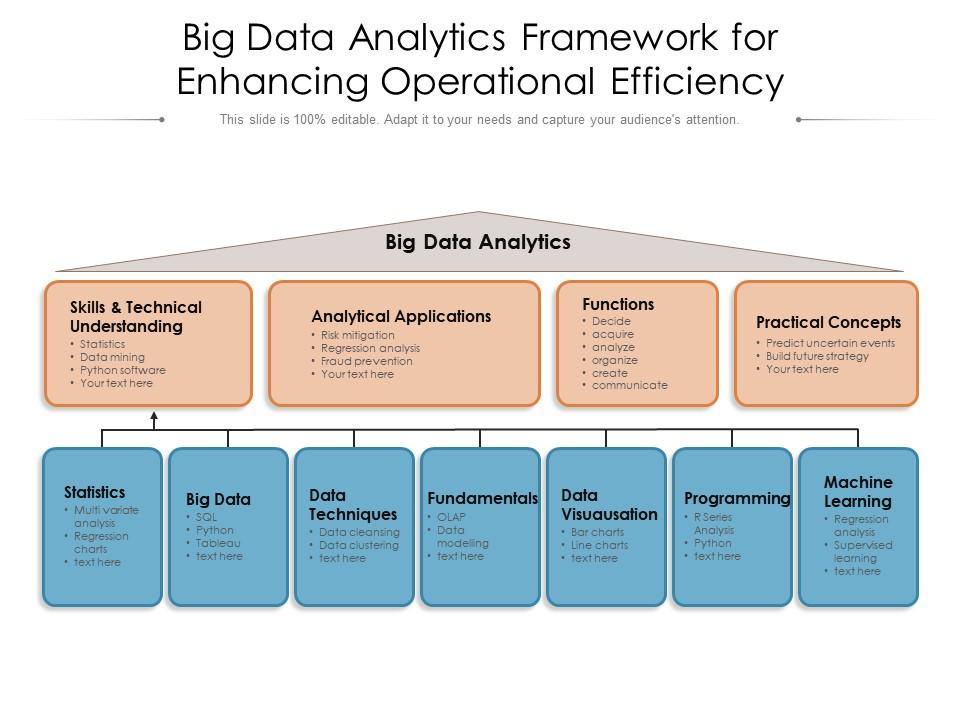

Have you ever wondered whether the familiar Excel spreadsheet, a tool synonymous with simplicity, could truly handle the immense challenges posed by big data? This raises the question: can Excel rise to the occasion and navigate the complexities of big data analytics?

The short answer is yes, with caveats. With its comprehensive set of features and functionalities, Excel is well positioned to address the challenge of large data. It has proven itself as a powerful ally in the field of data management, from the very basics to advanced analytics. We will examine Excel’s capabilities in greater depth, and you will also learn about the challenges it faces when dealing with massive amounts of data.

As we dive into the world of big data exploration, consider how Excel can serve as a powerful tool for data analysis. The journey ahead covers workarounds, best practices, and real-world case studies that show how Excel can manage large amounts of data. We’ll look at Excel’s potential in the big data arena by breaking it down into smaller pieces, each of which holds a key to extracting valuable insights.

Excel’s Data Handling Capabilities

In the realm of data handling, Excel emerges not merely as a spreadsheet application but as a powerhouse equipped with versatile capabilities. Delving into its data-handling prowess unveils a spectrum of functionalities, from the fundamental to the advanced, that positions Excel as a formidable tool in the domain of analytics.

A. Basic Features

Excel’s foundational features lay the groundwork for effective data management, encompassing:

- Rows and Columns: Understanding the Fundamentals

  Excel’s grid structure, with its clear division into rows and columns, provides the fundamental framework for organizing and processing data. This structured layout facilitates easy comprehension and manipulation, allowing users to navigate seamlessly through datasets of varying sizes.

- Cell Limits: Excel’s Capacity for Storing Data

  A worksheet holds up to 1,048,576 rows and 16,384 columns, and a single cell holds up to 32,767 characters. Understanding these limits is crucial for users dealing with extensive datasets, ensuring that Excel remains a reliable platform for data consolidation without compromising efficiency.

- Importing and Exporting Data: Seamless Integration with Various Formats

  Excel’s prowess extends beyond its native environment, offering seamless integration with various data formats. The ability to import and export data ensures compatibility with external sources, fostering a dynamic ecosystem where information flows freely between Excel and other data repositories.

B. Advanced Features

As we ascend to the advanced echelons of Excel’s capabilities, a realm of sophistication unfolds:

- PivotTables: Analyzing Large Datasets with Ease

  PivotTables emerge as a game-changer, especially when confronted with large datasets. This feature empowers users to distill complex information into digestible insights, enabling a granular examination of data relationships and trends.

- Power Query: Streamlining Data Transformation Processes

  The Power Query functionality exemplifies Excel’s commitment to efficiency. By streamlining data transformation processes, users can cleanse, shape, and merge data from diverse sources, ensuring a harmonized and polished dataset ready for analysis.

- Data Models: Harnessing Relational Databases within Excel

  Excel’s foray into data modeling allows users to harness the power of relational databases. This advanced feature facilitates the creation of robust data relationships, enabling a deeper understanding of how disparate elements interconnect within the dataset.
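To make the PivotTable idea concrete, here is a minimal sketch in Python of the kind of summarization a PivotTable performs: grouping records by one field for rows, another for columns, and summing a value. It is not how Excel implements the feature; the function name `pivot_sum` and the sales records are invented purely for illustration.

```python
from collections import defaultdict


def pivot_sum(records, row_key, col_key, value_key):
    """Sum value_key for every (row_key, col_key) pair, PivotTable-style."""
    table = defaultdict(lambda: defaultdict(float))
    for record in records:
        table[record[row_key]][record[col_key]] += float(record[value_key])
    # Convert the nested defaultdicts to plain dicts for predictable output.
    return {row: dict(cols) for row, cols in table.items()}


# Hypothetical sales records, invented for illustration only.
sales = [
    {"region": "North", "quarter": "Q1", "amount": 120.0},
    {"region": "North", "quarter": "Q2", "amount": 80.0},
    {"region": "South", "quarter": "Q1", "amount": 200.0},
    {"region": "North", "quarter": "Q1", "amount": 30.0},
]

summary = pivot_sum(sales, "region", "quarter", "amount")
```

Here `summary` maps each region to its per-quarter totals, which mirrors a PivotTable with regions as rows, quarters as columns, and a sum of amounts as values.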

In navigating Excel’s data handling capabilities, users are not confined to a rigid structure but rather find themselves equipped with a dynamic toolset. Whether mastering the basics for streamlined organization or harnessing advanced features for intricate analyses, Excel proves its mettle as a versatile companion in the data-driven landscape. So, as we unravel the layers of Excel’s capabilities, it becomes evident that beneath its familiar interface lies a sophisticated engine, ready to tackle the challenges posed by diverse and extensive datasets.

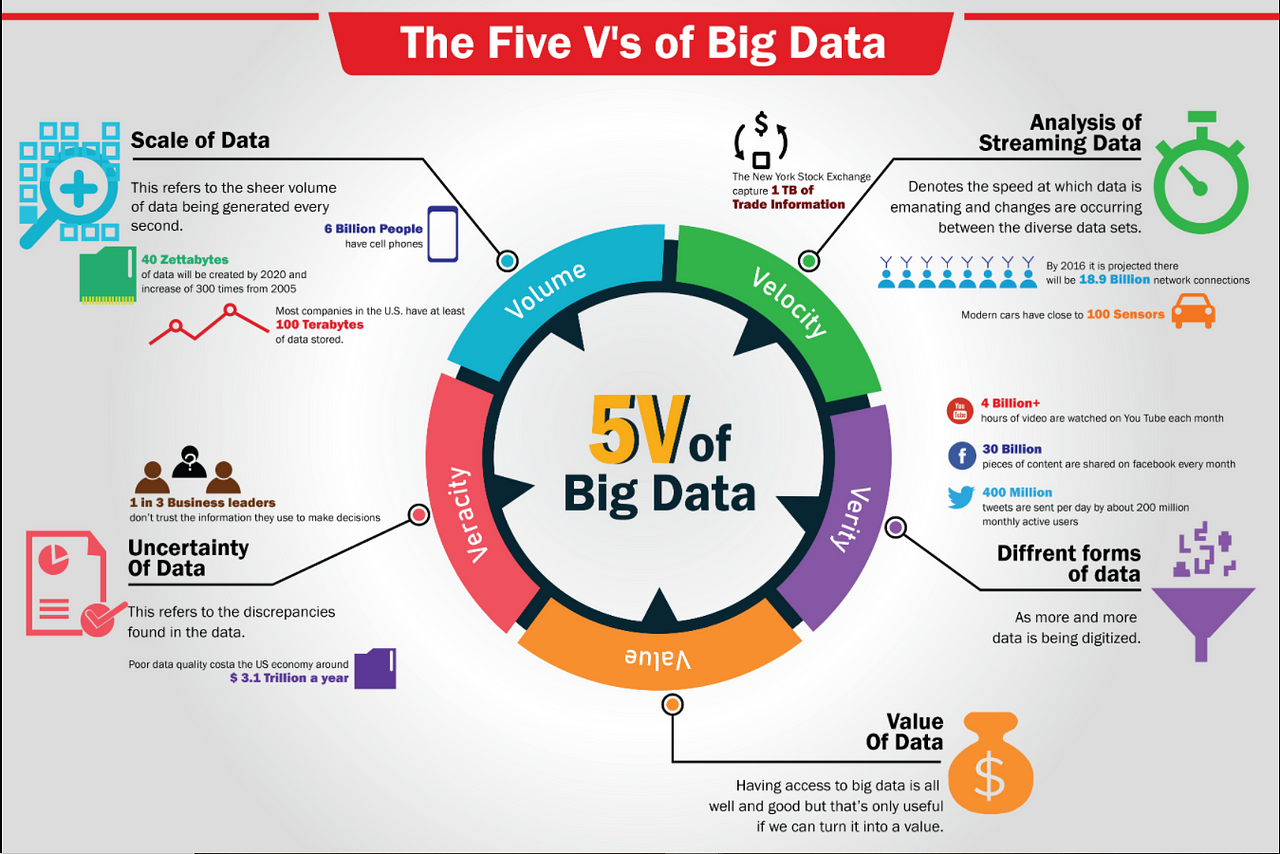

Excel and Big Data: Compatibility Challenges

When delving into the intricate interplay between Excel and big data, one cannot ignore the formidable challenges posed by the sheer scale of information. This exploration is particularly salient when examining the size limitations inherent in Excel’s handling of massive datasets.

A. Size Limitations

Excel, while a stalwart in data management, grapples with identifiable size limitations, including:

- Identifying Excel’s Limits in Handling Massive Datasets

  Excel handles everyday data well, but its efficacy falters when tasked with gargantuan datasets: a single worksheet cannot hold more than 1,048,576 rows or 16,384 columns. Users must tread carefully, understanding the thresholds beyond which Excel’s capabilities are stretched to their limits.

- Consequences of Exceeding Size Thresholds

  The consequences of surpassing Excel’s size thresholds are profound. Beyond the risk of system slowdowns, users may encounter data truncation (rows beyond the worksheet limit are not loaded) or, in extreme cases, application crashes. It becomes imperative for users to gauge the magnitude of their datasets and strategize accordingly to circumvent these pitfalls.
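One way to gauge a dataset before opening it is to scan it outside Excel. The sketch below, in Python, checks CSV data against the worksheet limits of the .xlsx format (1,048,576 rows, 16,384 columns, 32,767 characters per cell). The function name `check_excel_fit` and the sample data are assumptions made for illustration.

```python
import csv
import io

# Worksheet limits for the .xlsx format (Excel 2007 and later).
MAX_ROWS = 1_048_576
MAX_COLS = 16_384
MAX_CELL_CHARS = 32_767


def check_excel_fit(csv_stream):
    """Return a list of worksheet limits that the CSV data would exceed."""
    row_count = 0
    widest_row = 0
    longest_cell = 0
    for row in csv.reader(csv_stream):
        row_count += 1
        widest_row = max(widest_row, len(row))
        longest_cell = max(longest_cell, max((len(cell) for cell in row), default=0))

    problems = []
    if row_count > MAX_ROWS:
        problems.append(f"{row_count} rows exceeds {MAX_ROWS}")
    if widest_row > MAX_COLS:
        problems.append(f"{widest_row} columns exceeds {MAX_COLS}")
    if longest_cell > MAX_CELL_CHARS:
        problems.append(f"{longest_cell} characters in one cell exceeds {MAX_CELL_CHARS}")
    return problems


# Hypothetical in-memory data; a real file would be opened with open(path, newline="").
small = io.StringIO("id,name\n1,alpha\n2,beta\n")
too_wide = io.StringIO(",".join(["x"] * (MAX_COLS + 1)) + "\n")

small_report = check_excel_fit(small)
wide_report = check_excel_fit(too_wide)
```

An empty list means the data fits on one worksheet. Anything else is a signal to chunk the data or keep it in an external store before bringing it into Excel.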

B. Performance Issues

The compatibility challenges extend beyond size limitations, manifesting in performance issues that demand strategic consideration:

- Sluggish Response Times: Recognizing the Tipping Point

  As datasets burgeon, Excel’s responsiveness may wane, leading to sluggish response times. Recognizing the tipping point where Excel transitions from swift data processing to lethargic responsiveness is crucial. Users need to identify the threshold at which data volume starts impinging on operational speed.

- Strategies for Optimizing Excel’s Performance with Big Data

  Navigating the performance pitfalls involves implementing strategies tailored to optimize Excel’s functionality with big data. Options range from switching to manual calculation and limiting volatile functions to loading data into the Data Model rather than the worksheet grid. Each dataset demands a unique approach, so users should choose optimization techniques that align with their specific data characteristics.

As we dissect the compatibility challenges between Excel and big data, it becomes apparent that these hurdles are not insurmountable. Instead, they serve as beacons guiding users towards strategic data management. Excel, despite its limitations, emerges as a versatile ally when approached judiciously. By understanding the nuances of size limitations and performance intricacies, users can harness the full potential of Excel in the realm of big data analytics. The journey is one of strategic alignment, where users, armed with knowledge, navigate the data landscape with finesse, extracting insights while mitigating the challenges posed by colossal datasets.

Workarounds and Best Practices

In navigating the intricate landscape of Excel and big data, savvy users often find themselves exploring ingenious workarounds and best practices to overcome inherent challenges. This exploration delves into pragmatic strategies that not only mitigate obstacles but also enhance Excel’s functionality in handling extensive datasets.

A. Data Chunking

- Breaking Down Large Datasets into Manageable Chunks

  The art of data chunking emerges as a strategic workaround for circumventing Excel’s size limitations. By systematically breaking down large datasets into manageable chunks, users navigate the constraints posed by Excel’s capacity thresholds. This practice not only prevents system slowdowns but also fosters a more streamlined analytical process.

- Leveraging Excel’s Features for Chunked Data Analysis

  Excel, when wielded adeptly, offers a suite of features tailored for chunked data analysis. From utilizing pivot tables to harnessing Power Query, users can extract meaningful insights from segmented datasets. This approach not only enhances analytical precision but also optimizes resource utilization, ensuring a seamless data analysis experience.
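The chunking step itself can be done before the data ever reaches Excel. The following is a minimal Python sketch, assuming the source is a CSV file with a header row. The helper name `chunk_rows` and the tiny sample are hypothetical, and the chunk size of 2 is chosen only to keep the example readable.

```python
import csv
import io

EXCEL_MAX_ROWS = 1_048_576  # rows per worksheet, header row included


def chunk_rows(reader, chunk_size):
    """Yield (header, rows) pairs with at most chunk_size data rows each."""
    header = next(reader, None)
    if header is None:  # empty input: nothing to yield
        return
    chunk = []
    for row in reader:
        chunk.append(row)
        if len(chunk) == chunk_size:
            yield header, chunk
            chunk = []
    if chunk:  # final, possibly shorter, chunk
        yield header, chunk


# Hypothetical sample data; a real file would be opened with open(path, newline="").
sample = io.StringIO("id,value\n1,a\n2,b\n3,c\n4,d\n5,e\n")
chunks = list(chunk_rows(csv.reader(sample), chunk_size=2))
```

For a real dataset, a chunk size of `EXCEL_MAX_ROWS - 1` leaves room for the header on each sheet, and each `(header, rows)` pair can be written to its own file with `csv.writer`. Repeating the header in every chunk keeps each piece usable on its own in a PivotTable or Power Query.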

B. External Data Connections

- Utilizing External Databases with Excel

  An indispensable facet of effective big data management lies in the adept utilization of external data connections. Excel’s compatibility extends beyond its native confines, allowing users to tap into external databases seamlessly. This opens avenues for integrating diverse data sources, fostering a holistic approach to analysis.

- Connecting Excel to Cloud-Based Storage Solutions

  In an era dominated by cloud technology, Excel adapts to the evolving data landscape by enabling connections to cloud-based storage solutions. This strategic integration ensures that Excel becomes a conduit for real-time data exchange between local environments and cloud repositories, offering users unparalleled flexibility in data sourcing.
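A common pattern with external databases is to let the database do the heavy aggregation and hand Excel only the summarized result. The Python sketch below illustrates that idea with an in-memory SQLite database standing in for the external source. The `orders` table, its columns, and its rows are invented for illustration, and the CSV output is just one possible hand-off format.

```python
import csv
import io
import sqlite3

# An in-memory SQLite database stands in for an external data source.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("North", 120.0), ("South", 200.0), ("North", 30.0)],  # hypothetical rows
)

# Aggregate in the database so only a small summary reaches the spreadsheet.
rows = conn.execute(
    "SELECT region, COUNT(*), SUM(amount) FROM orders "
    "GROUP BY region ORDER BY region"
).fetchall()
conn.close()

# Write the summary as CSV text, a format Excel can import directly.
out = io.StringIO()
writer = csv.writer(out)
writer.writerow(["region", "orders", "total_amount"])
writer.writerows(rows)
summary_csv = out.getvalue()
```

However large the underlying table grows, the summary has one row per region, so it stays well inside a worksheet’s limits.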

C. Third-Party Add-Ins

- Exploring Add-Ins for Enhancing Excel’s Big Data Capabilities

  Third-party add-ins present a realm of possibilities for augmenting Excel’s big data capabilities. From advanced data visualization tools to specialized analytics modules, the market offers a plethora of add-ins designed to elevate Excel’s functionality. Users can explore these add-ins judiciously, selecting tools that align with their specific analytical needs.

- Key Considerations When Choosing Third-Party Tools

  While the marketplace is rife with third-party offerings, users must exercise discernment in their selection. Considering factors such as compatibility, scalability, and user reviews becomes paramount. This ensures that the chosen add-ins seamlessly integrate into Excel’s ecosystem, enhancing rather than hindering the analytical workflow.

As users delve into the nuanced realm of workarounds and best practices, a tapestry of strategic approaches unfolds. From the meticulous segmentation of data to the seamless integration of external sources and the judicious exploration of third-party enhancements, Excel enthusiasts find themselves equipped with an arsenal of tools. These strategies not only surmount compatibility challenges but also position Excel as a dynamic and adaptive ally in the ever-evolving landscape of big data analytics.

Case Studies

In the dynamic realm of data management, case studies stand as testament to the practical application of strategies discussed earlier. Real-world examples illuminate the efficacy of leveraging Excel’s capabilities in handling big data, offering invaluable insights into the challenges faced and overcome by organizations.

A. Real-world Examples

- Highlighting Successful Instances of Excel Handling Big Data

  Across industries, Excel has been a stalwart companion in navigating the complexities of big data. Instances abound where organizations have seamlessly integrated Excel into their workflows to handle vast datasets. From financial modeling to intricate project management, Excel emerges as a versatile tool that transcends traditional boundaries.

- Learning from Challenges Faced by Organizations

  The journey to harnessing Excel for big data is not without its share of challenges. Organizations grapple with issues ranging from size limitations to performance bottlenecks. These challenges, however, serve as crucibles for innovation. Through a detailed examination of these cases, practitioners glean valuable lessons, adapting strategies to suit their unique contexts.

In the realm of case studies, success stories are woven from the fabric of real-world applications. Take, for instance, a multinational corporation streamlining its inventory management through Excel’s prowess, or a research institution managing and analyzing extensive datasets for groundbreaking discoveries. These narratives not only underscore Excel’s versatility but also demystify the process of overcoming hurdles encountered in the pursuit of efficient data management.

Excel’s role extends beyond being a mere spreadsheet tool; it emerges as a dynamic ally in the ever-evolving landscape of data analytics. As we delve into these case studies, we unravel the intricate tapestry of solutions woven by organizations, each thread representing a strategic decision, a technological adaptation, or a triumphant moment in the face of data challenges. These real-world examples serve not only as a source of inspiration but also as pragmatic guides for those charting their course in the vast ocean of big data analytics.

Excel’s Future in Big Data: Navigating the Horizon of Possibilities

As we stand at the crossroads of technological evolution, Excel’s trajectory in the realm of big data beckons us towards a future marked by innovation and adaptability. The landscape of data management is in perpetual flux, and Excel, the stalwart spreadsheet software, is not immune to this transformation. Here’s a glimpse into what lies ahead:

Potential Enhancements and Updates to Accommodate Larger Datasets

- Scaling Capacities: Excel’s commitment to handling data is poised to evolve. Anticipate enhancements that will enable the software to seamlessly accommodate even larger datasets. With organizations grappling with ever-expanding data volumes, Excel’s responsiveness to this demand signals a future where size limitations become a distant memory.

- Streamlined Integration: Future updates may bring forth features that facilitate the seamless integration of Excel with diverse data sources. Imagine a scenario where Excel effortlessly ingests and analyzes data from varied platforms, transcending the current boundaries of compatibility.

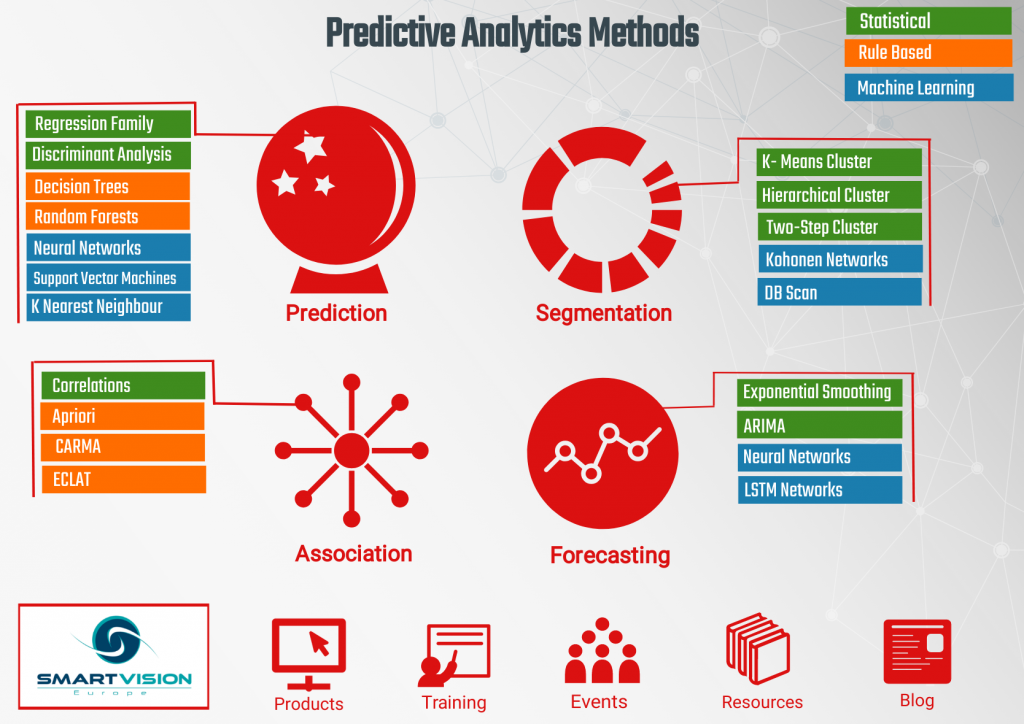

The Role of Excel in the Era of Advanced Analytics and Artificial Intelligence

- Intelligent Automation: Excel is set to embrace the era of advanced analytics and artificial intelligence (AI). Envision a landscape where Excel’s functionalities are augmented by AI algorithms, offering predictive insights and automating complex data transformations. This synergy positions Excel not merely as a tool but as an intelligent assistant in the data analytics ecosystem.

- Decision Support System: As organizations delve deeper into analytics, Excel is poised to evolve into a decision support system. Picture Excel not just as a repository of data but as a dynamic platform that aids in informed decision-making. Whether through predictive modeling or scenario analysis, Excel’s role becomes pivotal in shaping strategic choices.

In the tapestry of Excel’s future, we see threads of innovation, adaptability, and a relentless pursuit of efficiency. The software transcends its traditional identity, emerging as a dynamic player in the ever-expanding arena of big data analytics. The roadmap ahead foretells a narrative where Excel is not just a tool but a strategic partner in navigating the complexities of data management.

As we embrace the future of Excel in big data, the themes of evolution, scalability, and intelligent analytics resonate. These are not mere speculations but informed projections grounded in the trajectory of technological advancement. Excel’s journey continues, and with each update, it fortifies its standing as an indispensable companion in the ever-evolving landscape of data analytics.